- Intel’s Shared GPU Memory benefits LLMs

- Expanded VRAM pools allow smoother execution of AI workloads

- Some games slow down when memory expands

Intel has added a new capability to its Core Ultra systems that echoes an earlier AMD move.

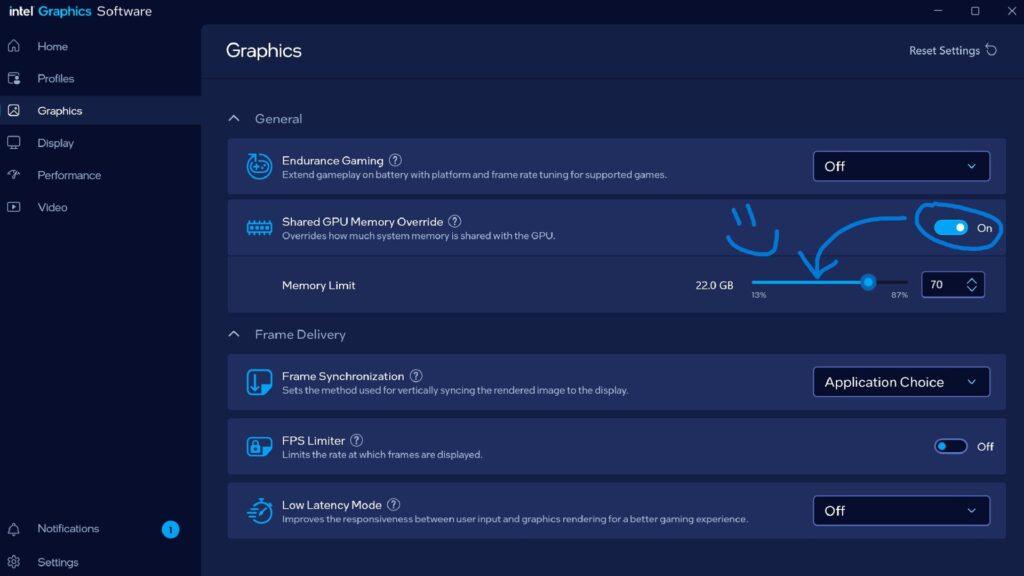

The feature, known as “Shared GPU Memory Override”, allows users to allocate additional system RAM for use by integrated graphics.

This development is aimed at machines that rely on integrated graphics instead of discrete GPUs, a category that includes many compact laptops and mobile workstation models.

Memory allocation and gaming performance

Bob Duffy, who leads Graphics and AI Evangelism at Intel, confirmed the update and advised that the latest Intel Arc drivers are required to enable the feature.

The change is presented as a way to improve system flexibility, particularly for users interested in AI tools and workloads that depend on memory availability.

Additional shared memory does not automatically benefit every application: testing has shown that some games load larger textures when more memory is available, which can reduce performance rather than improve it.

AMD’s earlier “Variable Graphics Memory” was framed largely as a gaming enhancement, especially when combined with AFMF.

This combination allowed more game assets to be stored directly in memory, which sometimes produced measurable gains.

However, the impact was not universal, as results varied depending on the software in question.

Intel’s adoption of a comparable system suggests that it wants to remain competitive, although skepticism remains over how broadly it will benefit everyday users.

While gamers may see mixed results, those who work with local models could gain more from Intel’s approach.

Running large language models locally is increasingly common, and these workloads are often limited by available memory.

By extending the pool of RAM available to integrated graphics, Intel is positioning its systems to handle larger models that would otherwise be constrained.

This could allow users to offload more of the model into VRAM, reducing bottlenecks and improving stability when running AI tools.
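As a rough illustration of why a larger shared memory pool matters for local models, the Python sketch below estimates how many layers of a model would fit in the memory visible to the GPU. The function name, the model size, the layer count, and the memory budgets are all hypothetical and do not come from Intel. The sketch also assumes every layer is the same size, and it treats anything else that needs GPU memory as a single reserved amount.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def layers_that_fit(model_bytes, n_layers, gpu_budget_bytes, reserve_bytes=0):
    """Estimate how many equally sized layers fit in the GPU-visible budget.

    Hypothetical helper: real models have uneven layer sizes and extra
    runtime overhead, which is approximated here by reserve_bytes.
    """
    if n_layers <= 0 or model_bytes <= 0:
        raise ValueError("model_bytes and n_layers must be positive")
    per_layer = model_bytes / n_layers
    usable = max(gpu_budget_bytes - reserve_bytes, 0)
    # Never report more layers than the model actually has.
    return min(n_layers, int(usable // per_layer))


# Hypothetical 8 GiB model with 32 layers, under two shared-memory budgets.
small_pool = layers_that_fit(8 * GIB, 32, 4 * GIB)
large_pool = layers_that_fit(8 * GIB, 32, 12 * GIB)
print(small_pool, large_pool)
```

Under these assumed figures, the smaller pool holds only part of the model while the larger pool holds all of it, which is the effect the article describes.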

For researchers and developers without access to a discrete GPU, this could offer a modest but useful improvement.