- Populating a single one-gigawatt AI facility costs nearly $80 billion

- Planned industry-wide AI capacity could total 100 GW

- High-end GPU hardware is typically replaced outright, not life-extended, after about five years

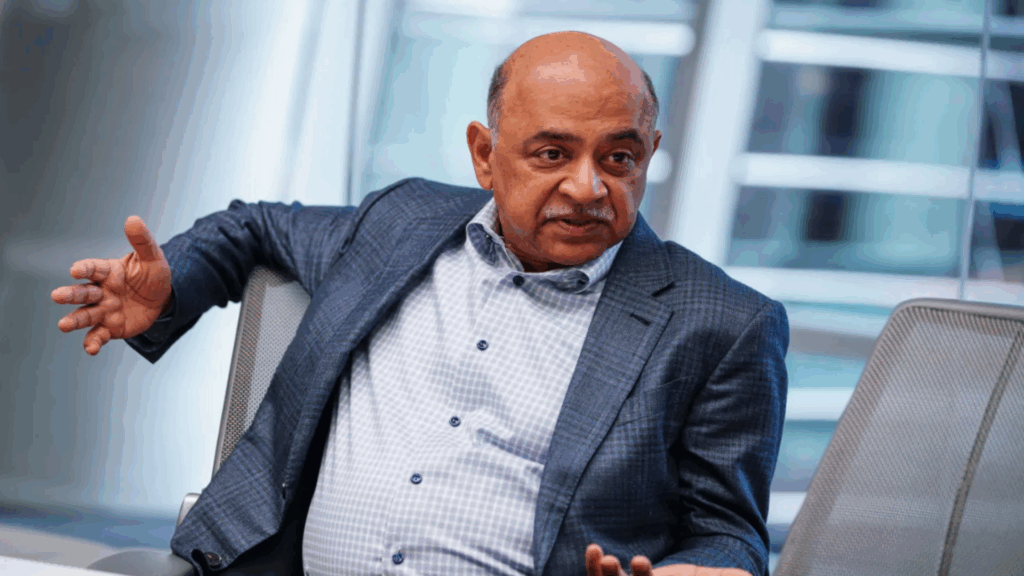

IBM CEO Arvind Krishna questions whether the current pace and scale of AI data center expansion can remain financially sustainable under existing assumptions.

He estimates that the cost of populating a single 1 GW site with computing hardware is now approaching $80 billion.

With public and private plans pointing to nearly 100 GW of future capacity dedicated to advanced model training, the implied financial exposure rises to roughly $8 trillion.
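The headline figure is a simple multiplication. A minimal sketch, assuming hardware cost scales linearly with capacity (every gigawatt costs the same ~$80 billion); the constant and function names are illustrative, not taken from any source:

```python
# Back-of-envelope check of the article's figures.
# Assumption: cost scales linearly with capacity (no volume discounts or price changes).
COST_PER_GW_USD = 80e9      # Krishna's estimate: ~$80 billion to populate 1 GW
PLANNED_CAPACITY_GW = 100   # approximate planned industry-wide capacity

def total_exposure(cost_per_gw: float, capacity_gw: float) -> float:
    """Return the total hardware outlay, assuming every gigawatt costs the same."""
    return cost_per_gw * capacity_gw

exposure = total_exposure(COST_PER_GW_USD, PLANNED_CAPACITY_GW)
print(f"${exposure / 1e12:.1f} trillion")
```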

Economic Burden of Next-Generation AI Sites

Krishna links this trajectory directly to the upgrade cycle that governs today’s accelerator fleets.

Most of the high-end GPU hardware deployed in these centers depreciates over about five years.

At the end of that period, operators do not extend the equipment's service life; they replace it outright. The result is not a one-time capital hit but a recurring obligation that builds with every refresh cycle.
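To illustrate why a five-year cycle turns the outlay into a recurring cost, here is a rough sketch under simplifying assumptions that do not come from Krishna: straight-line depreciation with no residual value, flat hardware prices, and the whole fleet bought at once in year zero and replaced at the end of each cycle. The names are hypothetical.

```python
import math

FLEET_CAPEX_USD = 80e9 * 100  # ~$80B per GW x ~100 GW, the article's figures
USEFUL_LIFE_YEARS = 5         # approximate depreciation period cited in the article

def annual_depreciation(capex: float, life_years: int) -> float:
    """Straight-line depreciation with zero residual value (an assumption)."""
    return capex / life_years

def cumulative_hardware_spend(capex: float, life_years: int, horizon_years: int) -> float:
    """Total outlay over a horizon if the fleet is bought in year 0 and replaced
    at the end of each life cycle at an unchanged price (an assumption)."""
    purchases = math.ceil(horizon_years / life_years)  # buys in years 0, life, 2*life, ...
    return capex * purchases

dep = annual_depreciation(FLEET_CAPEX_USD, USEFUL_LIFE_YEARS)
spend_10y = cumulative_hardware_spend(FLEET_CAPEX_USD, USEFUL_LIFE_YEARS, 10)
print(f"Depreciation: ${dep / 1e12:.1f} trillion per year")
print(f"Hardware spend over 10 years: ${spend_10y / 1e12:.1f} trillion")
```

Under these assumptions, a 10-year horizon already implies two full fleet purchases before price changes, financing costs, or energy are counted.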

CPUs remain part of these deployments but are no longer at the center of spending decisions.

The balance has shifted toward specialized accelerators that run massively parallel workloads at a pace general-purpose processors cannot match.

This shift has materially altered the definition of scale for modern AI facilities and pushed capital requirements beyond what traditional enterprise data centers once demanded.

Krishna argues that depreciation is the factor most often misunderstood by market participants.

The pace of architectural change means that performance leaps arrive faster than the hardware can comfortably pay for itself.

Hardware that still works becomes economically obsolete long before its physical useful life ends.

Investors such as Michael Burry have raised similar questions about whether cloud giants can keep extending the assumed useful lives of their assets as model sizes and training demands grow.

From a financial perspective, the main burden is no longer energy consumption or land acquisition but the forced rotation of increasingly expensive hardware stacks.

Similar refresh dynamics already exist in workstation environments, but the scale at hyperscale sites is fundamentally different.

Krishna estimates that covering the capital cost of these multi-gigawatt campuses would require hundreds of billions of dollars in annual profits just to break even.

That requirement rests on current hardware economics, not on speculative long-term efficiency gains.
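One way to see how an outlay of roughly $8 trillion translates into "hundreds of billions" a year is to apply a cost-of-capital rate to it. The rates below are purely illustrative assumptions, not figures from Krishna or the article, and this interest-only view ignores depreciation, taxes, and operating costs; the names are hypothetical.

```python
CAPEX_USD = 8e12  # ~$8 trillion implied by ~$80B/GW across ~100 GW

def annual_capital_charge(capex: float, rate: float) -> float:
    """Yearly profit needed just to cover the cost of capital at `rate`
    (simple interest-only view; `rate` is a hypothetical input)."""
    return capex * rate

for rate in (0.05, 0.08, 0.10):  # illustrative rates only
    charge = annual_capital_charge(CAPEX_USD, rate)
    print(f"{rate:.0%}: ${charge / 1e9:,.0f} billion per year")
```

At these assumed rates the charge lands in the hundreds of billions of dollars per year, consistent in order of magnitude with Krishna's estimate.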

These projections come as major technology companies announce ever-larger AI campuses, measured not in megawatts but in tens of gigawatts.

Some of these proposals already rival the electricity demand of entire nations, raising parallel concerns about grid capacity and long-term energy pricing.

Krishna puts the chances of today's LLMs achieving general intelligence on the next generation of hardware, without a fundamental change in how knowledge is integrated, at close to zero.

That assessment frames the wave of investment as driven more by competitive pressure than by a validated technological inevitability.

The implication is hard to avoid: the expansion assumes that future revenues will rise to match unprecedented spending.

This is happening even as depreciation cycles shorten and energy limits tighten in multiple regions.

The risk is that financial expectations may be outpacing the economic mechanisms necessary to sustain them throughout the life cycle of these assets.

Via Tom's Hardware
