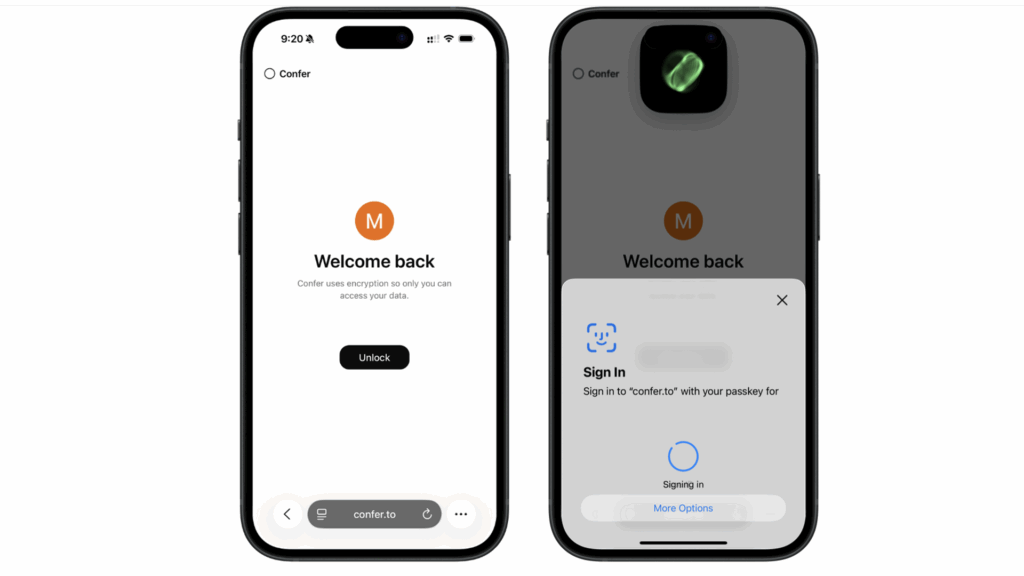

- Confer is a new AI assistant created by the founder of Signal

- Like Signal, Confer encrypts chats so no one else can read them

- Unlike ChatGPT or Gemini, Confer does not collect or store your data for training, logging or legal access

The man who popularized private messaging now wants to do the same for AI. Signal creator Moxie Marlinspike has launched a new AI assistant called Confer, built around similar privacy principles.

Conversations with Confer cannot be read even by server administrators. The platform encrypts every part of the user interaction by default and runs in what is called a trusted execution environment, so sensitive user data never leaves that encrypted bubble. No stored data is reviewed, used for training or sold to other companies. Confer is an outlier in this regard, as user data is generally regarded as the price of a free AI chatbot.

But with consumer trust in AI privacy already under pressure, the appeal is obvious. People are noticing that what they say to these systems is not always private. A court order last year forced OpenAI to retain all ChatGPT user records, even deleted ones, for potential legal discovery, and ChatGPT chats even appeared in Google search results for a time because of accidentally public links. There was also an uproar over contractors reviewing anonymized chatbot transcripts that included personal health information.

Confer's data is encrypted before it even reaches the server, using keys stored only on the user's device. Those keys are never uploaded or shared. Confer supports syncing chats between devices, but thanks to its cryptographic design not even Confer's creators can unlock them. It's ChatGPT with Signal's security.

Private AI

Confer’s design goes a step further than most privacy-first products by offering a feature called remote attestation. This allows any user to check exactly what code is running on Confer’s servers. The platform publishes the software stack in its entirety and digitally signs each version.
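The comparison at the heart of remote attestation can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Confer's actual protocol or API: the names `PublishedRelease`, `measure` and `matches_published_release` are hypothetical, the "builds" are placeholder byte strings, and a real check would also validate the hardware vendor's signature over the attestation report and the publisher's signature over each release, both of which are omitted here.

```python
import hashlib
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class PublishedRelease:
    """A release the operator has made public (hypothetical structure)."""
    version: str
    digest_hex: str  # SHA-256 digest of the published build artifact


def measure(artifact: bytes) -> str:
    """Return the SHA-256 digest of a build artifact as a hex string."""
    return hashlib.sha256(artifact).hexdigest()


def matches_published_release(
    reported_digest_hex: str,
    releases: Iterable[PublishedRelease],
) -> Optional[PublishedRelease]:
    """Return the published release whose digest equals the one the server
    reports, or None if the server is running something unpublished.

    Assumption: the reported digest has already been authenticated
    (signature checks are out of scope for this sketch).
    """
    for release in releases:
        if release.digest_hex == reported_digest_hex.lower():
            return release
    return None


if __name__ == "__main__":
    # Placeholder bytes standing in for a real, reproducibly built artifact.
    published = [PublishedRelease("1.0.0", measure(b"server build 1.0.0"))]

    honest_report = measure(b"server build 1.0.0")
    tampered_report = measure(b"server build 1.0.0 plus a logging patch")

    print(matches_published_release(honest_report, published))
    print(matches_published_release(tampered_report, published))
```

The point of the design is that the user does not have to take the operator's word for it: if the code running on the server differs from what was published by even one byte, the digests no longer match.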

This may not matter to all users. But for developers, organizations and watchdogs trying to evaluate how their data is handled, it’s a radical level of security that could let some worried fans of AI chatbots breathe easier.

It's not that other AI chatbots lack privacy settings. There are actually quite a few that users can review, even if they don't think to do so until after they've already shared something personal. ChatGPT, Gemini, and Meta AI all let you opt out of things like chat history and the use of your data for training, or delete your data entirely. But the default state is monitoring, and opting out is the user's responsibility.

Confer inverts that arrangement by making the most private configuration the built-in default. That also highlights how reactive most privacy tools are. It could at least raise awareness of, if not consumer demand for, more forgetful AI chatbots. Organizations such as schools and hospitals that are interested in AI could be drawn to tools that ensure confidentiality by design.
