I always enjoy the chance to dig into AI video generators. Even when they are terrible, they can be entertaining, and when they succeed, they can be incredible. So I was eager to play with Runway’s new Gen-4 model.

The company boasted that Gen-4 (and its smaller, faster sibling model, Gen-4 Turbo) can outperform the previous Gen-3 model in quality and consistency. Gen-4 supposedly nails the idea that characters can and should look like themselves from scene to scene, along with more fluid motion and better environmental physics.

It is also supposed to be remarkably good at following directions. You give it a visual reference and some descriptive text, and it produces a video that resembles what you imagined. In fact, it sounded a lot like how OpenAI promotes its own AI video creator, Sora.

Although Sora’s videos are generally beautiful, they are sometimes unreliable in quality. One scene can be perfect, and the next could have characters floating like ghosts or doors that lead nowhere.

Magic movie

Runway pitched Gen-4 as video magic, so I decided to test it with that in mind and see if I could make videos telling the story of a wizard. I cooked up some ideas for a short fantasy trilogy starring a wandering wizard. I wanted the wizard to meet an elf princess and then chase her through magical portals. Then, when he finds her again, she is disguised as a magical animal, and he transforms her back into a princess.

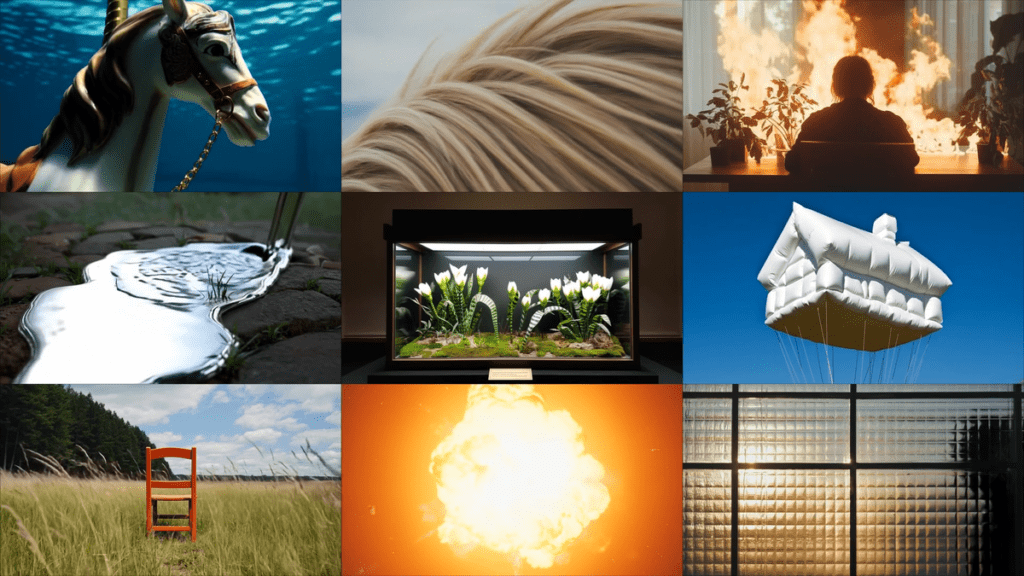

The goal was not to create a box office hit. I just wanted to see how far Gen-4 could stretch with minimal input. Not having photos of real wizards, I took advantage of the recently updated ChatGPT image generator to create convincing still images. Sora may not be blowing Hollywood away, but I cannot deny the quality of some of the images ChatGPT produces. I made the first video, then used Runway’s option to “fix” a seed so that the characters stay consistent across the videos. I stitched the three videos into a single movie below, with a short break between each one.

AI cinema

You can see that it is not perfect. Some objects move oddly, and the characters’ appearance is not fully consistent. Some background elements shimmered strangely, and I would not put these clips on a theater screen just yet. However, the actual movement, expressions, and emotion of the characters felt surprisingly real.

I also liked the iteration options, which did not overwhelm me with too many manual settings but still gave me enough control to feel actively involved in the creation, rather than just pressing a button and praying for coherence.

Now, will it take down Sora and OpenAI’s many professional filmmakers? No, certainly not right now. But I would at least experiment with it if I were an amateur filmmaker who wanted a relatively cheap way to see what some of my ideas could look like, at least before spending a ton of money on the people needed to make a movie look as powerful as my vision for it.

And if I felt comfortable enough with it, and got good enough at using and manipulating the AI to get what I wanted every time, I might not even think about using Sora. You do not need to be a wizard to see the spell Runway hopes to cast on its potential user base.