- AI-generated songs attributed to deceased artists, such as Blaze Foley, have been falsely uploaded to Spotify

- The streaming service is taking them down as they are spotted

- The tracks slipped through Spotify's content verification processes via platforms such as SoundOn

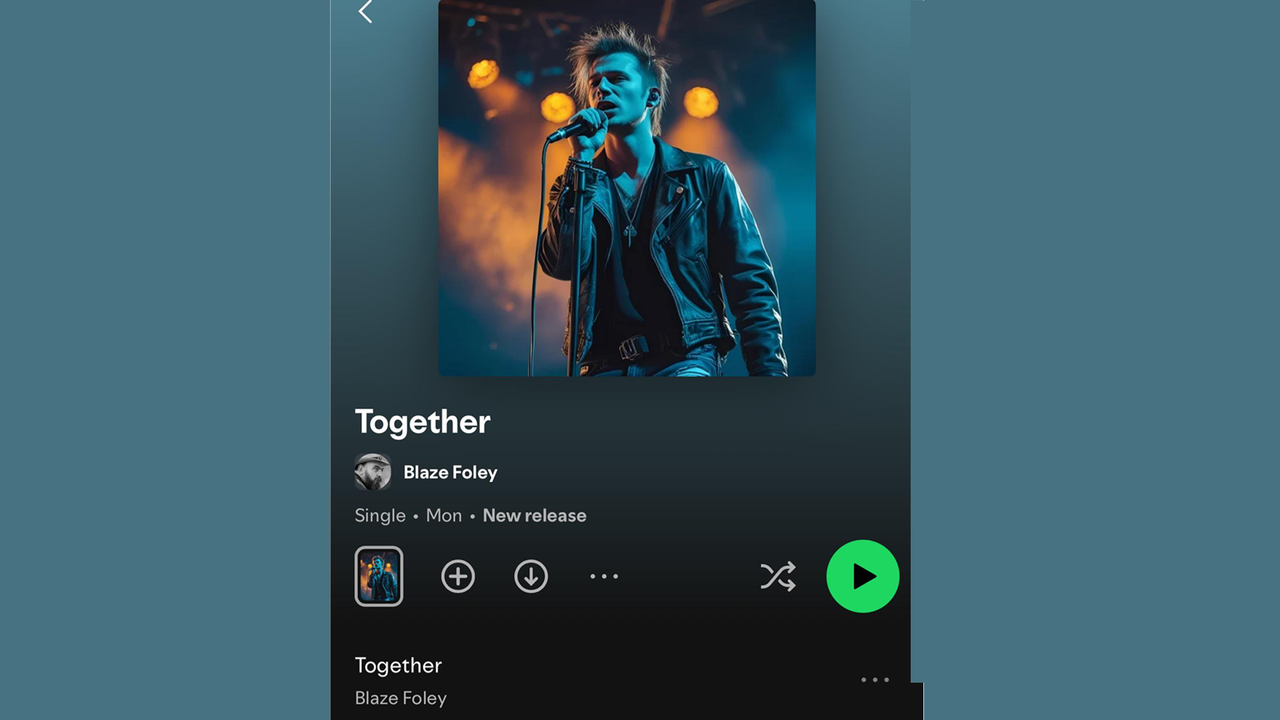

Last week, a new country song called “Together” appeared on Spotify under the official page of Blaze Foley, a country artist who was shot and killed in 1989. The ballad was unlike his other work, but there it was: cover art, credits and copyright information, just like any other new single. Except that this was not an unearthed track from before his death; it was an AI-generated fake.

After being flagged by fans and Foley's label, Lost Art Records, and reported on by 404 Media, the track was removed. Another fake song attributed to the late country icon Guy Clark, who died in 2016, was also taken down.

The report found that the AI-generated tracks carried copyright tags listing a company called Syntax Error as the owner, although little is known about it. Stumbling across AI-made songs on Spotify is not unusual. There are entire playlists of machine-generated lo-fi beats and ambient chillcore that already rack up millions of plays. But those tracks are typically presented under the names of imaginary artists, and their origin is usually mentioned.

Attribution is what makes the Foley case unusual. An AI-generated song uploaded to the wrong place and falsely linked to real, deceased human beings is many steps beyond simply sharing AI-created sounds.

Synthetic music embedded directly into the legacy of long-dead musicians without the permission of their families or labels is an escalation of the long-running debate over AI-generated content. That it happened on a giant platform like Spotify and was not caught by the streamer's own tools is understandably worrying.

And unlike some cases in which AI-generated music is passed off as a tribute or an experiment, these tracks were treated as official releases. They appeared in the artists' discographies. This latest controversy adds the disturbing wrinkle of real artists being misrepresented by fakes.

Posthumous artists

As for what happened on Spotify's end, the company attributed the upload to SoundOn, a music distributor owned by TikTok.

“The content in question violates Spotify's deceptive content policies, which prohibit impersonation intended to deceive, such as replicating the name, image or description of another creator, or impersonating a person, brand or organization in a deceptive way,” Spotify said in a statement to 404 Media.

“This is not allowed. We take action against licensors and distributors who fail to police this type of fraud, and those who commit repeated or egregious violations can be and have been permanently removed from Spotify.”

That it was removed is great, but the fact that the track appeared at all suggests a problem with flagging these issues earlier. Given that Spotify processes tens of thousands of new tracks every day, the need for automation is obvious. However, that means a track's origins may go entirely unchecked as long as the technical requirements are met.

That matters not only for artistic reasons, but as a matter of ethics and economics. When generative AI can be used to manufacture fake songs in the name of dead musicians, and there is no immediate or foolproof mechanism to stop it, one has to ask how artists can prove who they are and get the credit and royalties that they or their estates have earned.

Apple Music and YouTube have also had trouble filtering out deepfake content. And as AI tools like Suno and Udio make it easier than ever to generate songs in seconds, with lyrics and vocals to match, the problem will only grow.

There are verification processes that can be used, as well as tags and watermarks built into AI-generated content. However, platforms that prioritize streamlined uploads may not be fans of the additional time and effort involved.

AI can be a great tool for helping to produce and enhance music, but that means using it as a tool, not as a mask. If an AI generates a track and it is labeled as such, that is great. But if someone intentionally passes that work off as part of an artist's legacy, especially an artist who can no longer defend it, that is fraud. It may seem like a minor aspect of the AI debates, but people care about music, and what happens in this industry could have repercussions for every other aspect of AI use.