- The Nvidia H800 was launched in March 2023 and is a cut-down version of the H100

- It is also significantly slower than Nvidia's H200 and AMD's Instinct range

- These artificial limitations have forced DeepSeek's engineers to innovate

It was widely assumed that the United States would remain the unchallenged global AI superpower, particularly after President Trump's recent announcement of Project Stargate, a $500 billion initiative to bolster AI infrastructure in the US. This week, however, saw a seismic shift with the arrival of China's DeepSeek. Developed at a fraction of the cost of its American rivals, DeepSeek seemingly came out of nowhere and made such an impact that it wiped $1 trillion off the market value of US tech stocks, with Nvidia the biggest casualty.

Obviously, anything developed in China is going to be highly secretive, but a technical paper published a few days before the chat model stunned AI observers gives us some insight into the technology that powers China's equivalent of ChatGPT.

In 2022, the US blocked imports of advanced Nvidia GPUs into China to tighten its grip on critical AI technology, and it has imposed further restrictions since then, but that evidently hasn't stopped DeepSeek. According to the paper, the company trained its V3 model on a cluster of 2,048 Nvidia H800 GPUs, which are cut-down versions of the H100.

Cheap training

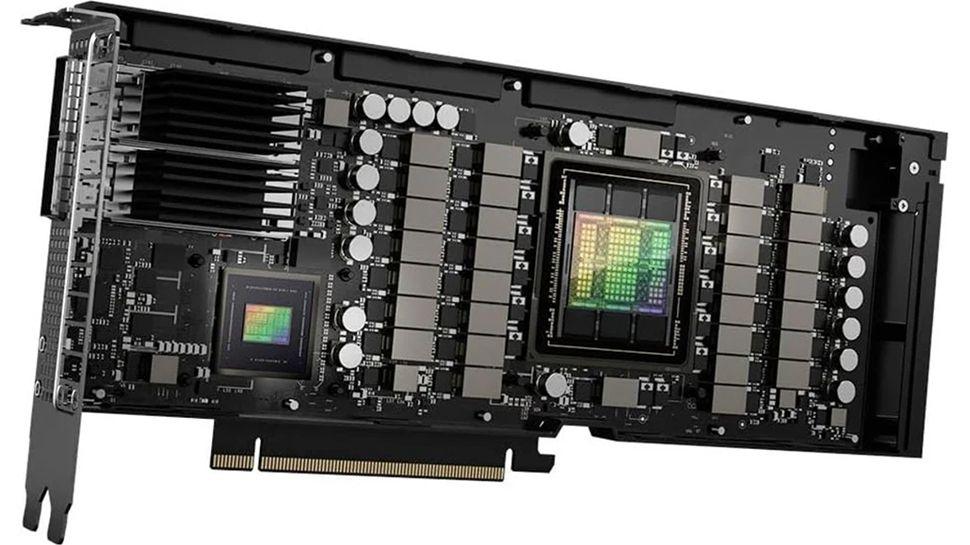

The H800 was launched in March 2023 to comply with US export restrictions to China, and features 80GB of HBM3 memory with 2TB/s of bandwidth.

It lags behind the newer H200, which offers 141GB of HBM3e memory and 4.8TB/s of bandwidth, and AMD's Instinct MI325X, which outstrips both with 256GB of HBM3e memory and 6TB/s of bandwidth.
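To put "lags behind" into numbers, the short sketch below expresses each accelerator's memory capacity and bandwidth as a multiple of the H800's, using only the figures quoted above. The `SPECS` table and `relative_to` helper are illustrative names, not part of any vendor tool.

```python
# Memory specs as quoted in the article: (capacity in GB, bandwidth in TB/s).
SPECS = {
    "Nvidia H800": (80, 2.0),
    "Nvidia H200": (141, 4.8),
    "AMD Instinct MI325X": (256, 6.0),
}


def relative_to(baseline: str) -> dict[str, tuple[float, float]]:
    """Return each GPU's capacity and bandwidth as multiples of the baseline GPU."""
    base_mem, base_bw = SPECS[baseline]
    return {
        name: (mem / base_mem, bw / base_bw)
        for name, (mem, bw) in SPECS.items()
    }


if __name__ == "__main__":
    for name, (mem_x, bw_x) in relative_to("Nvidia H800").items():
        print(f"{name}: {mem_x:.2f}x memory, {bw_x:.2f}x bandwidth")
```

On these figures the H200 offers roughly 1.8x the H800's memory and 2.4x its bandwidth, while the MI325X offers 3.2x the memory and 3x the bandwidth.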

Each node in the cluster DeepSeek trained on houses 8 GPUs connected by NVLink and NVSwitch for intra-node communication, while InfiniBand interconnects handle communication between nodes. The H800 has lower NVLink bandwidth than the H100, and this naturally affects multi-GPU communication performance.
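The topology described above can be sketched as a simple rank-to-node mapping: 2,048 GPUs at 8 per node gives 256 nodes, and any transfer between two GPUs either stays on the NVLink/NVSwitch fabric inside a node or crosses InfiniBand. This assumes GPUs are numbered contiguously node by node, which is a common convention but an assumption here; `node_of` and `link_type` are hypothetical helpers.

```python
GPUS_PER_NODE = 8    # from the article: each node houses 8 GPUs
TOTAL_GPUS = 2048    # from the article: size of the V3 training cluster
NUM_NODES = TOTAL_GPUS // GPUS_PER_NODE


def node_of(rank: int) -> int:
    """Map a global GPU rank to its node (ASSUMES contiguous numbering per node)."""
    return rank // GPUS_PER_NODE


def link_type(src: int, dst: int) -> str:
    """Report which fabric a transfer between two GPUs would use in this layout."""
    if not (0 <= src < TOTAL_GPUS and 0 <= dst < TOTAL_GPUS):
        raise ValueError("rank out of range")
    return "NVLink/NVSwitch" if node_of(src) == node_of(dst) else "InfiniBand"


if __name__ == "__main__":
    print(NUM_NODES)          # 256
    print(link_type(0, 7))    # NVLink/NVSwitch (same node)
    print(link_type(7, 8))    # InfiniBand (adjacent nodes)
```

Under this layout, any traffic that leaves a group of 8 GPUs pays the inter-node cost, which is why reduced interconnect bandwidth puts pressure on how work is divided across the cluster.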

DeepSeek-V3 required a total of 2.79 million GPU hours for pretraining and fine-tuning on 14.8 trillion tokens, using a combination of pipeline and data parallelism, memory optimizations and innovative quantization techniques.
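As a rough illustration of combining pipeline and data parallelism, the sketch below arranges the 2,048 GPUs as a grid: each model replica is split into sequential pipeline stages, and the same stage is duplicated across data-parallel replicas that average gradients. The 16 x 128 split is a hypothetical choice for illustration, not DeepSeek's published configuration, and the helper names are likewise made up.

```python
from typing import NamedTuple

# HYPOTHETICAL split of the 2,048 GPUs, NOT DeepSeek's actual configuration.
PIPELINE_STAGES = 16
DATA_PARALLEL = 128
WORLD_SIZE = PIPELINE_STAGES * DATA_PARALLEL  # 2,048 GPUs


class Coord(NamedTuple):
    replica: int  # which data-parallel copy of the model
    stage: int    # which pipeline stage (slice of layers) within that copy


def coord_of(rank: int) -> Coord:
    """Lay ranks out so consecutive ranks form one replica's pipeline."""
    if not 0 <= rank < WORLD_SIZE:
        raise ValueError("rank out of range")
    replica, stage = divmod(rank, PIPELINE_STAGES)
    return Coord(replica, stage)


def pipeline_group(replica: int) -> list[int]:
    """Ranks that pass activations to one another, stage by stage."""
    return [replica * PIPELINE_STAGES + s for s in range(PIPELINE_STAGES)]


def data_parallel_group(stage: int) -> list[int]:
    """Ranks holding the same layers, which average their gradients each step."""
    return [r * PIPELINE_STAGES + stage for r in range(DATA_PARALLEL)]


if __name__ == "__main__":
    print(coord_of(17))           # Coord(replica=1, stage=1)
    print(pipeline_group(0)[:4])  # [0, 1, 2, 3]
```

Keeping each pipeline on consecutive ranks means most stage-to-stage hops stay within a node, while gradient averaging across replicas is what travels between nodes; real frameworks tune this placement carefully.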

The Next Platform, which has taken a deep dive into how DeepSeek works, says "at a cost of $2 per GPU hour (we have no idea if that is actually the prevailing price in China), then it cost a mere $5.58 million to train V3."
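The arithmetic behind that estimate is simple enough to check directly. The sketch below multiplies the reported GPU hours by the assumed $2 hourly price, and also divides the GPU hours across the 2,048-GPU cluster to get an idealized wall-clock duration, assuming every GPU was busy for the whole run. It counts GPU time only; the function names are illustrative.

```python
GPU_HOURS = 2.79e6         # total H800 GPU hours reported for V3
PRICE_PER_GPU_HOUR = 2.0   # The Next Platform's assumed rental price, in USD
CLUSTER_GPUS = 2048        # GPUs in the training cluster


def training_cost(gpu_hours: float, price: float) -> float:
    """GPU-time cost only: hours multiplied by an assumed hourly price."""
    return gpu_hours * price


def wall_clock_days(gpu_hours: float, gpus: int) -> float:
    """Idealized duration if every GPU in the cluster ran for the whole job."""
    return gpu_hours / gpus / 24


if __name__ == "__main__":
    print(f"${training_cost(GPU_HOURS, PRICE_PER_GPU_HOUR) / 1e6:.2f} million")  # $5.58 million
    print(f"~{wall_clock_days(GPU_HOURS, CLUSTER_GPUS):.0f} days")               # ~57 days
```

At full utilization, 2.79 million GPU hours spread over 2,048 GPUs works out to a little under two months of training.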