- The acquisition of Nvidia leads to the engineers of contributing directly to their ia ecosystem

- EMFASSSSIS GRANDS UP TO 18 TB OF MEMORY FOR GPU GROUPS

- The elastic memory fabric releases HBM for efficient time sensitive tasks

The decision of Nvidia to spend more than $ 900 million in Undergraduate was a surprise, especially because it came along with a separate investment of $ 5 billion in Intel.

According Servethehome“Underlighter has the most great technology,” probably due to its unique approach to solve one of the largest scale problems of AI: link tens of thousands of computer chips so that they can operate as a single system without wasting resources.

This agreement suggests that NVIDIA believes that solving interconnection interconnection bottlenecks is as critical as ensuring chips production capacity.

A unique approach to data tissues

The accelerated calculation cloth switch architecture (ACF-S) was built with PCIe lanes on one side and high-speed networks in the other.

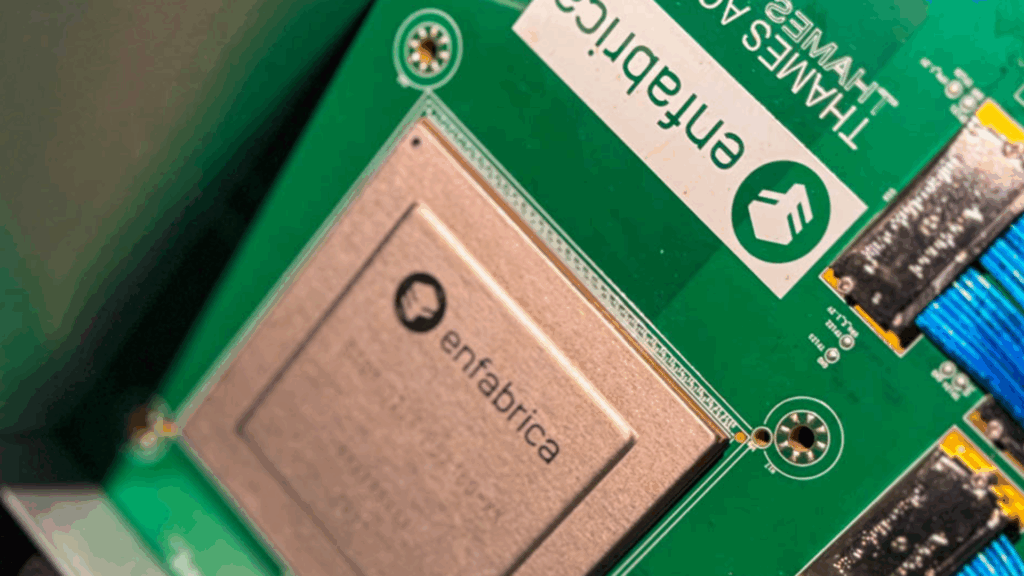

Its ACF-S “Millennium” device is a 3.2TBPS network chip with 128 PCIe lanes that can connect GPU, NIC and other devices while maintaining flexibility.

The company’s design allows the data to move between ports or through the chip with a minimum latency, unite Ethernet and PCIE/CXL technologies.

For AI groups, this means greater use and less inactive GPUs that expect data, which translates into a better return on investment for expensive hardware.

Another piece of the substance supply is its ESFASSS chassis, which uses CXL controllers to group up to 18 TB of memory for GPU groups.

This elastic memory fabric allows GPU to download data from their limited HBM memory in shared storage throughout the network.

By releasing HBM for critical tasks over time, operators can reduce tokens processing costs.

Underbracking said that the reductions could reach up to 50% and allow inference workloads to climb without the development of local memory capacity.

For large language models and other AI workloads, such capacities could become essential.

The ACF-S chip also offers high-radius multiple radio redundancy. Instead of a few 800 Gbps mass links, operators can use 32 smaller connections of 100 Gbps.

If a switch fails, only about 3% of the bandwidth is lost, instead of a large part of the network disconnected.

This approach could improve the reliability of the cluster at scale, but also increases the complexity in the design of the network.

The agreement leads to the Engineering team of Undergraduate, including CEO Rochan Sankar, directly to Nvidia, instead of leaving such innovation to rivals such as AMD or Broadcom.

While NVIDIA’s Intel participation guarantees manufacturing capacity, this acquisition directly addresses the scale limits in AI data centers.