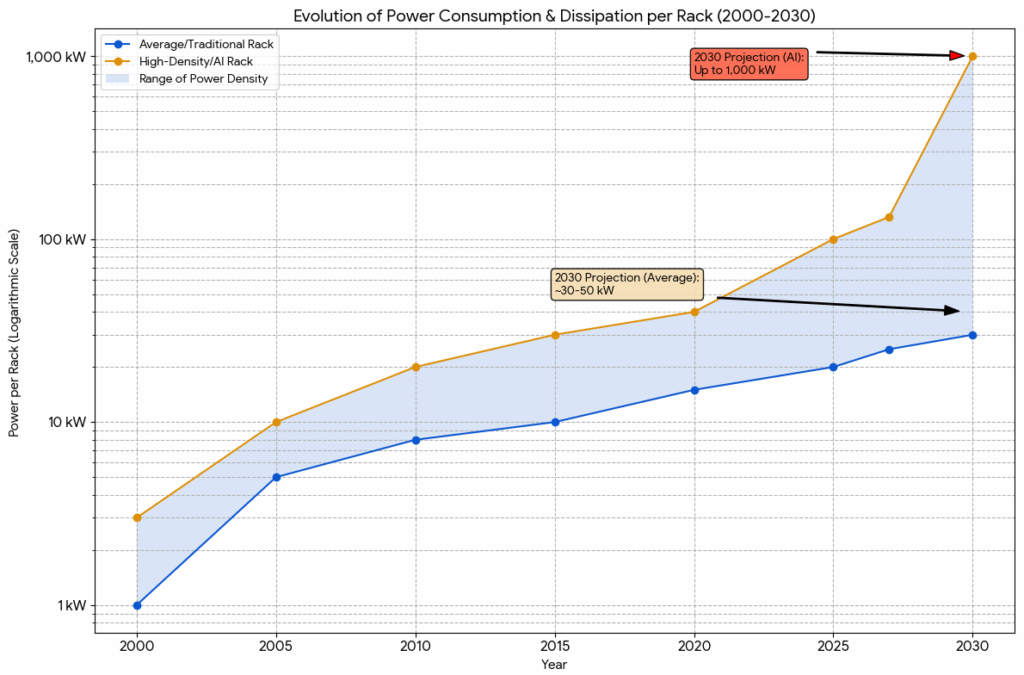

- AI-focused racks are projected to consume up to 1MW each by 2030

- Average racks are expected to rise steadily to 30-50kW over the same period

- Cooling and power distribution are becoming strategic priorities for future data centers

Long considered the basic unit of a data center, the rack is being reshaped by the rise of AI, and a new chart (above) from Lennox Data Center Solutions shows how quickly this change is unfolding.

Where racks once consumed only a few kilowatts, the company’s projections suggest that by 2030 an AI-focused rack could reach 1MW of power use, a scale once reserved for entire facilities.

Average data center racks are expected to reach 30-50kW over the same period, reflecting a steady rise in compute density, and the contrast with AI workloads is striking.

New demands for power delivery and cooling

According to the projections, a single AI rack could use 20 to 30 times the power of its general-purpose counterpart, creating new demands on power delivery and cooling infrastructure.
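The multiple quoted above follows from the rack figures in the projection. A minimal sketch of the arithmetic, assuming the 1MW and 30-50kW numbers from the article; the function and variable names are illustrative only:

```python
# Figures taken from the article's projections for 2030.
AI_RACK_KW = 1000.0                   # 1 MW AI-focused rack
AVERAGE_RACK_KW_RANGE = (30.0, 50.0)  # average rack, low and high end


def rack_power_ratio(ai_rack_kw: float, general_rack_kw: float) -> float:
    """Return how many times more power the AI rack draws."""
    return ai_rack_kw / general_rack_kw


for general_kw in AVERAGE_RACK_KW_RANGE:
    ratio = rack_power_ratio(AI_RACK_KW, general_kw)
    print(f"{general_kw:.0f} kW rack: AI rack draws about {ratio:.0f}x as much")
```

With these inputs the ratio works out to roughly 20 to 33 times, which is in line with the 20 to 30 times cited.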

Ted Pulfer, director of Lennox Data Center Solutions, said cooling has become central to the industry.

“Cooling, once part of the supporting infrastructure, has now moved to the forefront of the conversation, driven by increasing compute densities, AI workloads and growing interest in approaches such as liquid cooling,” he said.

Pulfer described the level of industry collaboration now taking place. “Manufacturers, engineers and end users are working more closely than ever, sharing ideas and experimenting together both in the lab and in real-world deployments. This hands-on cooperation is helping to tackle some of the most complex cooling challenges we have faced,” he said.

The goal of delivering 1MW of power to a rack is also reshaping how systems are built.

“Instead of traditional lower-voltage AC, the industry is moving towards high-voltage DC, such as +/-400V. This reduces power loss and cable size,” Pulfer explained.
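The reasoning behind the higher voltage can be illustrated with basic circuit arithmetic: for a fixed power, current falls as voltage rises (I = P / V), and resistive loss in a cable scales with the square of the current (I²R). The sketch below is a simplification and not Lennox's calculation. It assumes +/-400V is treated as 800 V between the two rails, uses a hypothetical 230 V feed as the lower-voltage comparison, picks an arbitrary 1 milliohm cable resistance, and ignores three-phase wiring and power factor.

```python
def current_amps(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Power dissipated in a cable of the given resistance (I^2 * R)."""
    current = current_amps(power_w, voltage_v)
    return current ** 2 * resistance_ohm


RACK_POWER_W = 1_000_000.0    # 1 MW rack, from the article
CABLE_RESISTANCE_OHM = 0.001  # assumed value, for illustration only

# 230 V is an assumed low-voltage baseline; 800 V assumes +/-400 V rails.
for volts in (230.0, 800.0):
    amps = current_amps(RACK_POWER_W, volts)
    loss = resistive_loss_w(RACK_POWER_W, volts, CABLE_RESISTANCE_OHM)
    print(f"{volts:.0f} V: {amps:,.0f} A, {loss / 1000:.1f} kW lost in the cable")
```

Under these assumptions the 800 V case needs 1,250 A instead of roughly 4,350 A, and the same cable loses about twelve times less power, which is why smaller conductors become practical.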

“Cooling is handled by a central facility CDU that manages the liquid flow to rack manifolds. From there, the fluid is delivered to individual cold plates mounted directly on the servers’ hottest components.”

Most data centers today rely on cold plates, but the approach has limits. Microsoft has been testing microfluidics, in which tiny channels are etched into the back of the chip, allowing coolant to flow directly across the silicon.

In early trials, this removed heat up to three times more effectively than cold plates, depending on the workload, and reduced GPU temperature rise by 65%.

By combining this design with AI that maps hotspots on the chip, Microsoft was able to direct coolant with greater precision.

Although hyperscalers could dominate this space, Pulfer believes smaller operators still have room to compete.

“At times, the volume of orders moving through factories can create delivery bottlenecks, which opens the door for others to step in and add value. In this market, agility and fast-paced innovation continue to be key strengths across the industry,” he said.

What is clear is that power and heat rejection are now central issues, no longer secondary to compute performance.

As Pulfer puts it, “Heat rejection is essential to keeping the world’s digital foundations running smoothly, reliably and sustainably.”

By the end of the decade, the shape and scale of the rack itself may determine the future of digital infrastructure.