- Google is adding gesture-based search to its Google Lens feature

- The update is rolling out to the Chrome and Google apps on iOS

- The company is also working on more artificial intelligence capabilities for Lens

If you use the Chrome or Google apps on your iPhone, there is now a new way to quickly find information based on what is on your screen. If it works well, it could save you time and make your searches easier.

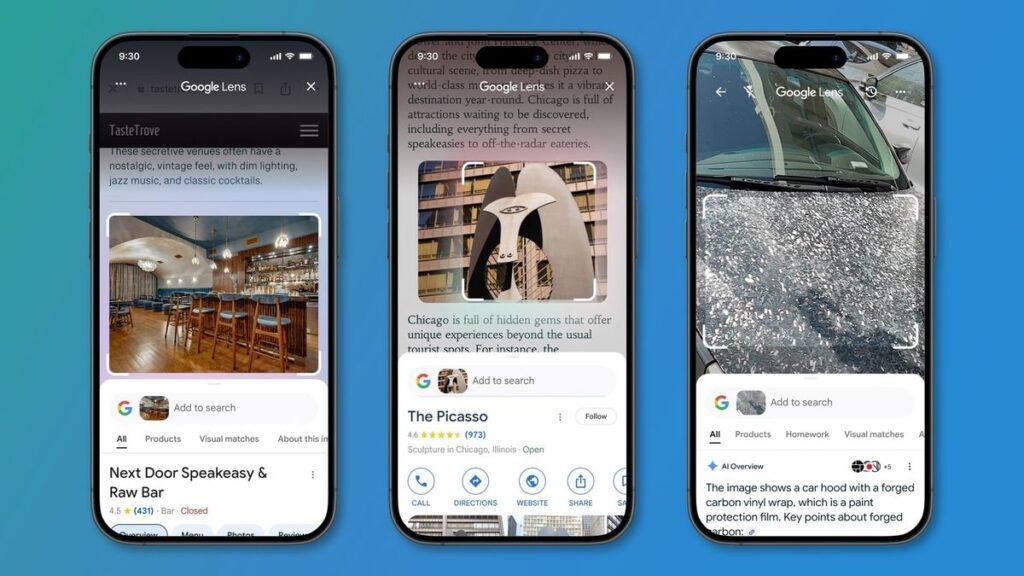

The update concerns Google Lens, which lets you search using images instead of words. Google says you can now use a gesture to select something on the screen and then search for it. You can draw around an object, for example, or tap it to select it. It works whether you are reading an article, shopping for something new or watching a video, Google explains.

The best iPhones have had a similar feature for a while, but it has always been an unofficial workaround that required using the Action button and an app. Now, it is a feature built into some of the most popular iOS apps available.

Both the Chrome and Google apps already have Google Lens, but the previous implementation was clumsier than today’s update. Before, you had to save an image or take a screenshot, and then upload it to Google Lens. That could mean using multiple apps, and it was much more of a hassle. Now, a quick gesture is all that is needed.

How to use the new Google Lens on iPhone

When using the Chrome or Google app, tap the three-dot menu button, then select “Search Screen with Google Lens” or “Search this Screen”, respectively. This will place a colored overlay on top of the web page you are currently viewing.

You will see a box at the bottom of your screen reading “Circle or tap anywhere to search.” Now you can use a gesture to select an element on the screen. Doing so will automatically search for the selected object using Google Lens.

The new gesture feature will roll out worldwide this week and will be available in the Chrome and Google apps on iOS. Google also confirmed that it will add a new Lens icon to the address bar in the future, which will give you another way to use gestures with Google Lens.

Google added that it is also using artificial intelligence (AI) to add new capabilities to Lens. This will allow you to search for more novel or unique subjects, and it will mean that Google’s AI Overviews appear more frequently in your results.

This feature will also roll out this week and will reach English-language users in countries where AI Overviews are available. For now, it is set to arrive in the Google app for Android and iOS first, with availability in Chrome on desktop and mobile coming later.