Google wants you to know that Gemini 2.0 Flash should be your favorite AI chatbot. The model boasts more speed, bigger brains, and more common sense than its predecessor, Gemini 1.5 Flash. After putting Gemini 2.0 Flash to the test against ChatGPT, I decided to see how Google’s new favorite model compares with its older sibling.

As with the previous matchup, I set up the duel with a few prompts built around common ways anyone might use Gemini, myself included. Could Gemini 2.0 Flash offer better tips for improving my life, explain a complex topic I know little about in a way I can understand, or solve a tricky logic problem and explain its reasoning? Here’s how the test went.

Productive options

If there’s one thing AI should be able to do, it’s give useful advice. Not just generic advice, but actionable ideas you can use right away. So I asked both versions the same question: “I want to be more productive but also have a better work-life balance. What changes should I make to my routine?”

Gemini 2.0 was noticeably quicker to respond, even if only by a second or two. As for the actual content, both had some good advice. The 1.5 model broke down four big ideas in bullet points, while 2.0 went for a longer list of 10 ideas explained in short paragraphs.

I liked some of 1.5’s more specific suggestions, such as the Pareto principle, but beyond that, 1.5 felt like it was mostly restating the original question, while 2.0 seemed to give me more nuanced life tips with each suggestion. If a friend asked me for advice on the subject, I would definitely go with the 2.0 response.

What about Wi-Fi?

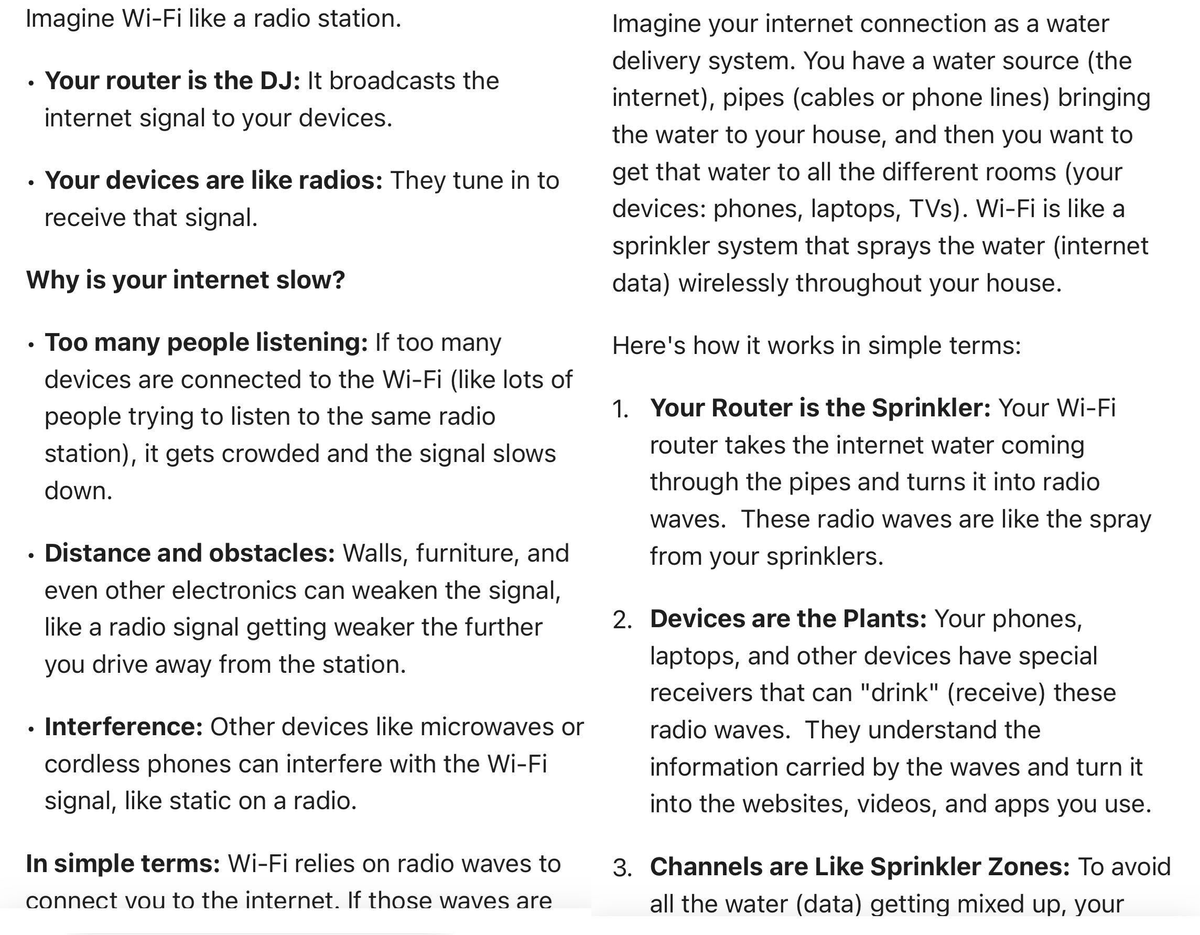

A big part of what makes an AI assistant useful isn’t just how much it knows, but how well it can explain things in a way that really clicks. A good explanation is not just a list of facts; it’s about making something complex feel intuitive. For this test, I wanted to see how well both versions of Gemini could break down a technical topic in a way that felt relevant to everyday life. I asked: “Explain how Wi-Fi works, but in a way that makes sense for someone who just wants to know why their internet is slow.”

Gemini 1.5 compared Wi-Fi to radio, which is more of a description than the analogy it claimed to be making. Calling the router the DJ is also a bit of a stretch, although its advice for improving the signal was at least sound.

Gemini 2.0 used a more elaborate metaphor involving a water delivery system, with devices cast as plants receiving the water. The AI extended the metaphor to explain what could be causing problems, such as too many “plants” for the available water and clogged pipes standing in for internet provider problems. The “sprinkler interference” comparison was much weaker, but as with version 1.5, Gemini 2.0 offered practical advice for improving the Wi-Fi signal. Despite being much longer, the 2.0 response arrived slightly faster.

Logic puzzle

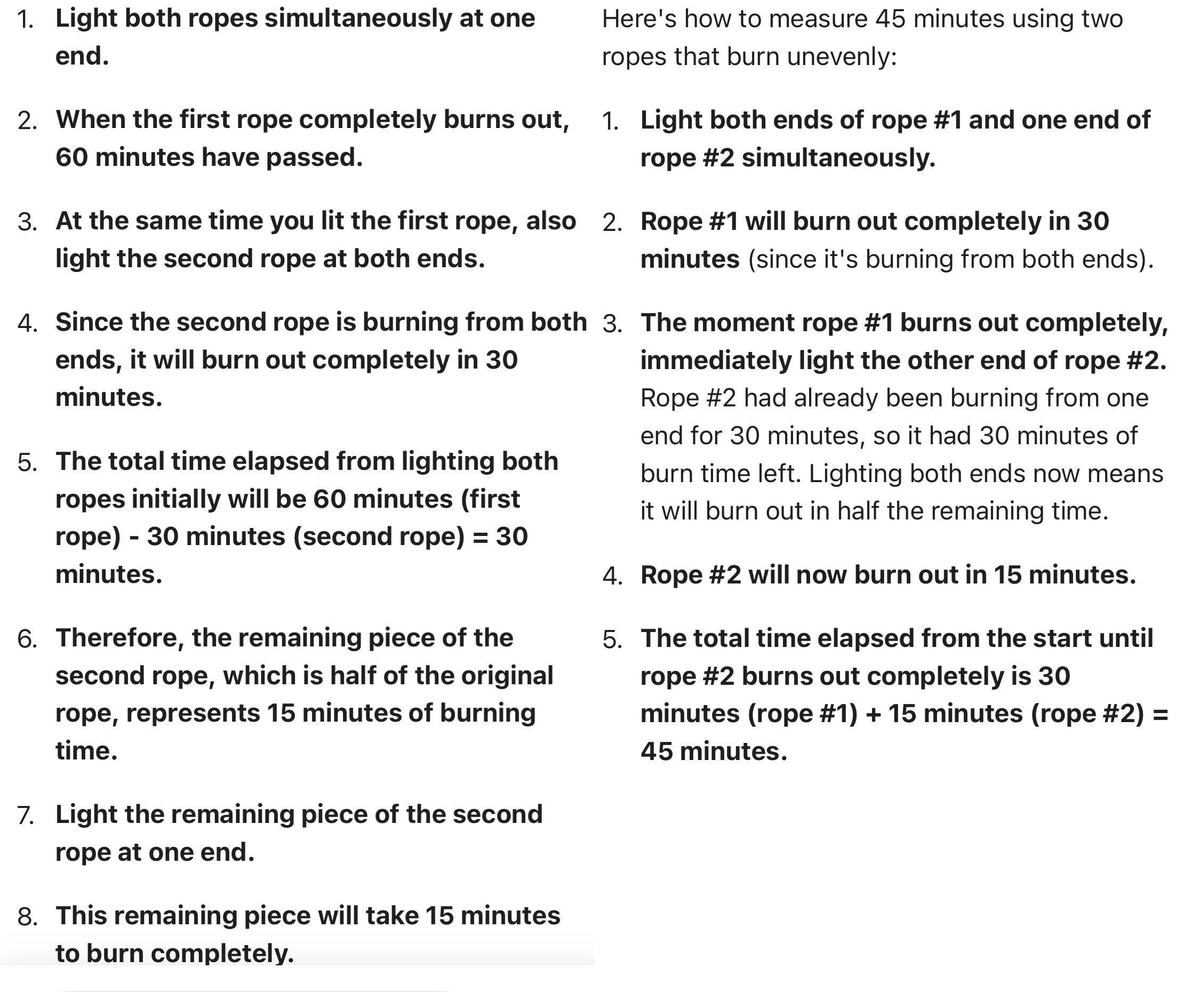

For the last test, I wanted to see how well both versions handled logic and reasoning. AI models are supposed to be good at puzzles, but it’s not just about getting the right answer; it’s about whether they can explain why an answer is correct in a way that actually makes sense. I gave them a classic puzzle: “You have two ropes. Each takes exactly one hour to burn, but they don’t burn at a consistent rate. How do you measure exactly 45 minutes?”

Both models technically gave the correct answer for measuring the time, but in ways about as different as possible while staying within the puzzle’s constraints and still being correct. Gemini 2.0’s response was shorter, laid out in a way that was easier to follow, and clearly explained despite its brevity. Gemini 1.5’s response required more careful reading, and the steps felt a bit out of order. The wording was also confusing, especially when it said to light the remaining rope “at one end” without specifying that it meant the end that wasn’t already burning.

Even with such a compact response, Gemini 2.0 stood out as notably better at solving this type of logic puzzle.
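For readers who want to check the puzzle’s logic rather than take either chatbot’s word for it, here is a minimal Python sketch of the standard solution: light rope A at both ends and rope B at one end; when A burns out after 30 minutes, light B’s other end, and B burns out 15 minutes later. This is not a reproduction of either Gemini answer. It assumes each rope can be modelled as a row of pieces with arbitrary, unequal burn times summing to 60 minutes, and the names `burn_out_time`, `measure_45`, and `random_rope` are hypothetical, chosen only for illustration.

```python
from fractions import Fraction
import random


def burn_out_time(pieces, right_lit_at=None):
    """Return the minute at which a rope is fully burned.

    Assumption (for illustration): the rope is a row of consecutive pieces,
    and pieces[i] is how many minutes piece i takes to burn with one flame.
    Unequal values model a rope that burns at an uneven rate.
    The left end is lit at t=0; the right end is lit at `right_lit_at`
    minutes (0 means both ends at once, None means never).
    """
    rem = [Fraction(p) for p in pieces]  # burn time left in each piece
    lo, hi = 0, len(rem) - 1             # pieces the two flames are in
    t = Fraction(0)
    while lo <= hi:
        right_burning = right_lit_at is not None and t >= right_lit_at
        if not right_burning:
            # Only the left flame: burn until its piece is gone or the right end is lit.
            step = rem[lo]
            if right_lit_at is not None:
                step = min(step, right_lit_at - t)
            rem[lo] -= step
            t += step
            if rem[lo] == 0:
                lo += 1
        elif lo == hi:
            # Both flames inside the same piece: its remaining time is halved.
            t += rem[lo] / 2
            lo += 1
        else:
            # Flames in different pieces: advance until one of those pieces is gone.
            step = min(rem[lo], rem[hi])
            rem[lo] -= step
            rem[hi] -= step
            t += step
            if rem[lo] == 0:
                lo += 1
            if rem[hi] == 0:
                hi -= 1
    return t


def measure_45(rope_a, rope_b):
    """Standard solution: returns (when rope A burns out, when rope B burns out)."""
    # Light rope A at both ends and rope B at one end, all at t=0.
    t_a = burn_out_time(rope_a, right_lit_at=0)
    # The moment A is gone, light the other end of B.
    t_b = burn_out_time(rope_b, right_lit_at=t_a)
    return t_a, t_b


def random_rope(rng, n_pieces=20):
    """Hypothetical uneven rope: random piece times that sum to exactly 60 minutes."""
    weights = [rng.randint(1, 100) for _ in range(n_pieces)]
    total = sum(weights)
    return [Fraction(60 * w, total) for w in weights]


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(1000):
        t_a, t_b = measure_45(random_rope(rng), random_rope(rng))
        assert (t_a, t_b) == (30, 45)
    print("Rope A burned out at 30 minutes and rope B at 45 in every trial")
```

The design choice worth noting: exact `Fraction` arithmetic avoids floating-point drift, and the simulation makes the key insight concrete. Each flame uses up one minute of “burn time” per minute wherever it happens to be on the rope, so two flames finish any remaining stretch in half its remaining burn time, no matter how unevenly the rope burns.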

Gemini 2.0 wins on speed and clarity

After running the prompts, the differences between Gemini 1.5 Flash and Gemini 2.0 Flash were clear. Although 1.5 wasn’t exactly useless, it seemed to struggle with specificity and with making useful comparisons. The same goes for its logical breakdown. Applied to computer code, that kind of reasoning would need a lot of cleanup to produce a working program.

Gemini 2.0 Flash was not only faster but also more creative in its answers. It seemed much more capable of imaginative analogies and comparisons, and much clearer in explaining its own logic. That doesn’t mean it’s perfect. The water analogy fell apart a bit, and the productivity advice could have used more concrete examples or ideas.

That said, it was very fast, and those problems could be cleared up with a little back-and-forth conversation. Gemini 2.0 Flash isn’t the ultimate, perfect assistant, but it’s definitely a step in the right direction for Google as it strives to outdo rivals like ChatGPT.