- ASICs are much more efficient than GPUs for inference, just as they are for cryptocurrency mining.

- The inference AI chip market is expected to grow nearly sixfold by the end of the decade.

- Hyperscalers such as Google have already jumped on the bandwagon.

Nvidia, already a leader in AI and GPU technologies, is moving into the application-specific integrated circuit (ASIC) market to address growing competition and changing trends in AI semiconductor design.

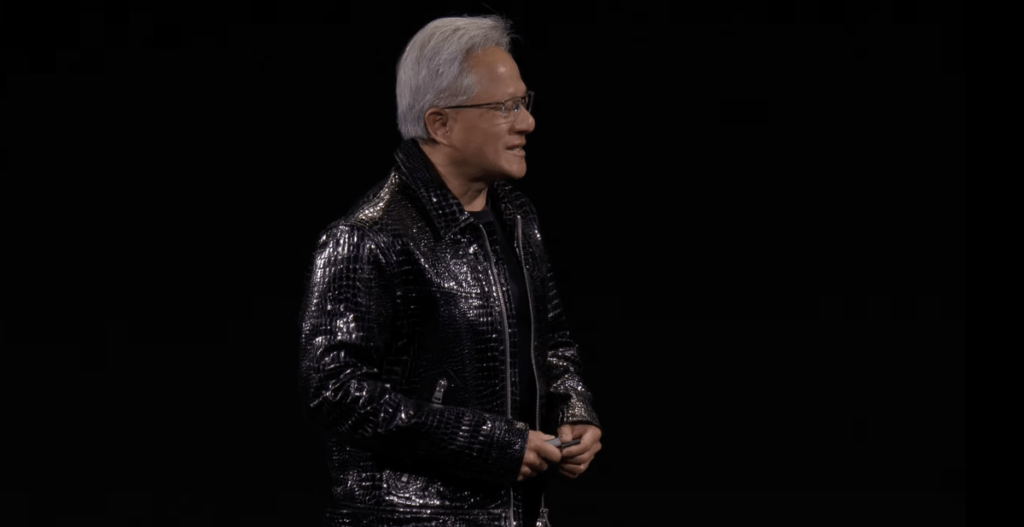

The global rise of generative AI and large language models (LLMs) has sharply increased demand for GPUs. Nvidia CEO Jensen Huang has confirmed that the company will hire 1,000 engineers in Taiwan in 2024.

Now, according to the Taiwanese newspaper Commercial Times (in a report published in Chinese), the company has established a new ASIC department and is actively recruiting talent.

The rise of inference chips

Nvidia's H-series GPUs, optimized for AI training workloads, have been widely adopted for training AI models. However, the AI semiconductor market is shifting toward chips built specifically for inference, many of which are ASICs.

This shift is driven by demand for chips optimized for real-world AI applications such as large language models and generative AI. Because an ASIC's circuitry is designed for a narrow, fixed workload rather than general-purpose computation, it can deliver better efficiency than a GPU for inference, much as it does for cryptocurrency mining.

According to Verified Market Research, the inference AI chip market is projected to grow from $15.8 billion in 2023 to $90.6 billion in 2030.
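Assuming steady compounding over the seven years from 2023 to 2030, those two figures imply a compound annual growth rate (CAGR) of roughly 28%. This is a back-of-the-envelope calculation, not a figure taken from the report:

$$
\text{CAGR} = \left(\frac{90.6}{15.8}\right)^{1/7} - 1 \approx 5.73^{1/7} - 1 \approx 0.283
$$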

Major tech players have already adopted custom ASIC designs. Google, for example, uses one in its "Trillium" AI chip, which will be widely available in December 2024.

The shift toward custom AI chips has intensified competition among semiconductor giants. Companies such as Broadcom and Marvell have grown in prominence and market value as they work with cloud service providers to develop specialized chips for data centers.

To stay ahead, Nvidia's new ASIC department is drawing on local expertise by recruiting engineers from leading Taiwanese companies such as MediaTek.