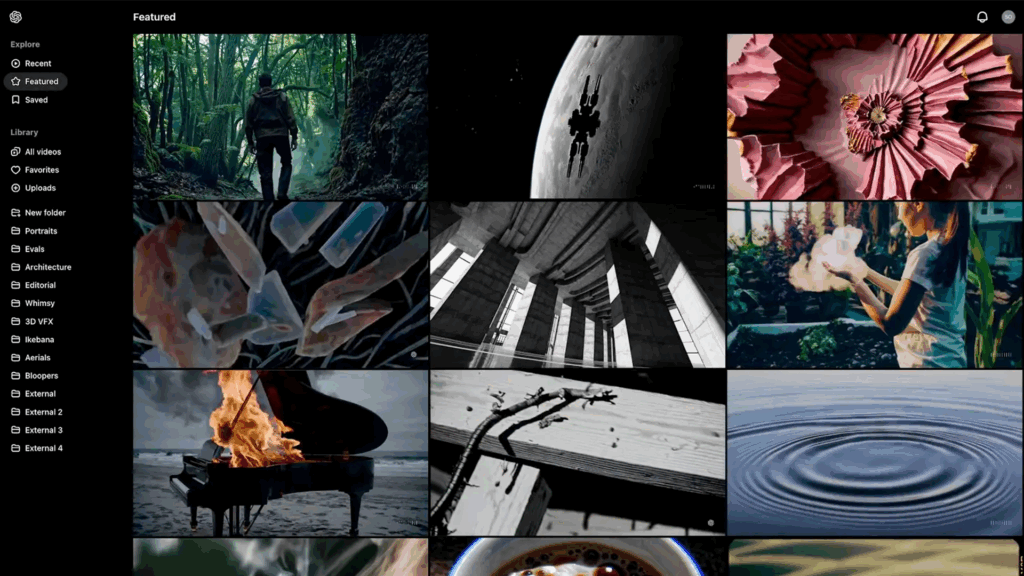

- The Sora 2 AI video model is expected to launch soon

- Sora 2 will face tough competition from Google’s Veo 3 model

- Veo 3 already offers features that Sora does not, and OpenAI will need to improve both what Sora can do and how easy it is to use to attract potential customers

OpenAI seems to be finalizing plans to launch Sora 2, the next iteration of its text-to-video model, based on references spotted on OpenAI’s servers.

Nothing has been officially confirmed, but there are signs that Sora 2 will be a major update aimed squarely at Google’s Veo 3 AI video model. It is not just a race to generate prettier pixels; it is about sound and the experience of producing what the user imagines when writing a prompt.

OpenAI’s Sora impressed many when it debuted with its high-quality visuals. However, its clips were silent films. When Veo 3 debuted this year, it showed off short clips with baked-in, synchronized speech and ambient audio. Not only could you see a man pouring coffee in slow motion, but you could also hear the soft splash of the liquid, the clink of ceramic, and even the hum of the diner around the digital character.

To make Sora 2 stand out as more than a lesser alternative to Veo 3, OpenAI will need to figure out how to stitch believable voices, sound effects, and ambient noise into even better versions of its visuals. Getting audio right, particularly lip-sync, is tricky. Most AI video models can show you a face saying words. The magic trick is making it seem as if those words really came from that face.

It is not that Veo 3 is perfect at matching sound to picture, but there are examples of videos with audio synced surprisingly tightly to mouth movements, background music that matches the mood, and effects that fit the intent of the video.

Granted, a maximum of eight seconds per video limits the scope for success or failure, but fidelity to the scene is necessary before considering duration. And it is hard to deny that Veo 3 can make videos that look and sound like real cats jumping off a high dive into a pool. Still, if Sora 2 can extend to 30 seconds or more with consistent quality, it is easy to see it attracting users looking for more room to create AI videos.

Sora 2’s movie mission

OpenAI’s Sora can stretch to 20 seconds or more of high-quality video. And because it is integrated into ChatGPT, you can make it part of a larger project. This flexibility significantly helps Sora stand out, but the absence of audio is notable. To compete directly with Veo 3, Sora 2 will have to find its voice. Not only find it, but weave it smoothly into the videos it produces. Sora 2 could have great audio, but if it cannot surpass the seamless way Veo 3 connects audio with its visuals, it may not matter.

At the same time, making Sora 2 too good could cause its own problems. With each new generation of AI video model, there is more concern about blurring the line with reality. Sora and Veo 3 do not allow prompts involving real people, violence, or copyrighted content. But adding audio opens a completely new dimension of scrutiny about the origin and use of realistic voices.

The other big question is price. Google has Veo 3 behind the Gemini Advanced paywall, and you really need to subscribe to the AI Ultra tier at $250 per month if you want to use Veo 3 all the time. OpenAI could bundle access to Sora 2 into the ChatGPT Plus and Pro tiers in a similar way, but if it can offer more at the cheaper tier, it is likely to expand its user base quickly.

For the average person, the AI video tool they turn to will depend on that price, as well as ease of use and the features and quality of the video. There is a lot OpenAI must do if Sora 2 is to be more than a silent misstep in the AI race, but it seems we will find out soon how well it can compete.