- Nvidia Rubin DGX SuperPOD delivers 28.8 exaflops with just 576 GPUs

- Each NVL72 system combines 36 Vera CPUs, 72 Rubin GPUs and 18 DPUs

- Aggregate NVLink throughput reaches 260 TB/s per DGX rack for greater efficiency

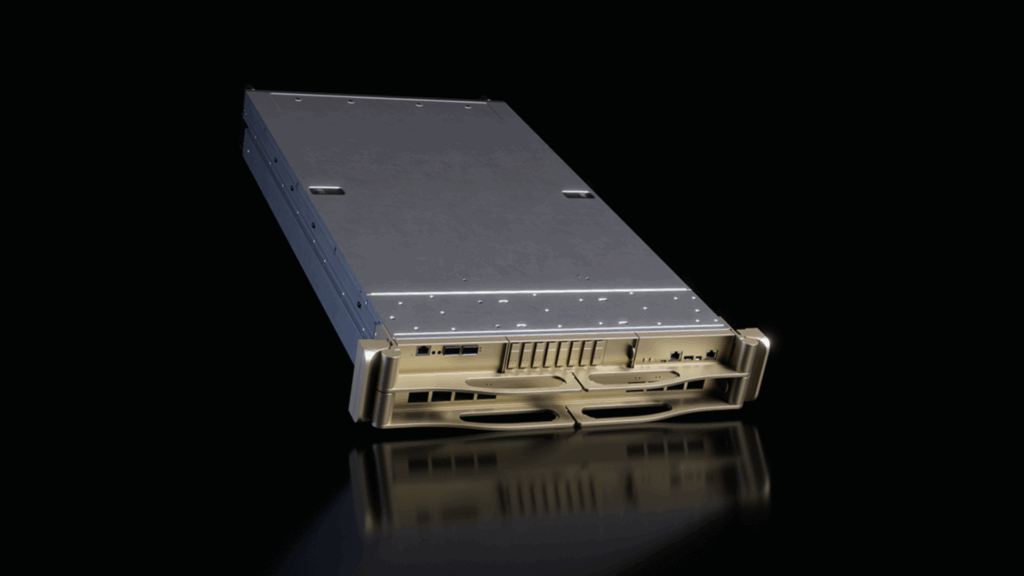

At CES 2026, Nvidia unveiled its next-generation DGX SuperPOD powered by the Rubin platform, a system designed to deliver extreme AI computing in dense, integrated racks.

According to the company, the SuperPOD integrates multiple Vera Rubin NVL72 or NVL8 systems into a single coherent AI engine, supporting large-scale workloads with minimal infrastructure complexity.

With liquid-cooled modules, high-speed interconnects and unified memory, the system is aimed at institutions seeking maximum AI performance and reduced latency.

Rubin-based compute architecture

Each DGX Vera Rubin NVL72 system includes 36 Vera CPUs, 72 Rubin GPUs, and 18 BlueField-4 DPUs, with each Rubin GPU rated at 50 petaflops of FP4 performance, a figure consistent with the 28.8 exaflops Nvidia quotes for 576 GPUs.

Aggregate NVLink throughput reaches 260 TB/s per rack, allowing the rack's memory and compute to operate as a single coherent AI engine.
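To put the per-rack figures in proportion, the short Python sketch below divides the quoted numbers across the rack. It assumes NVLink throughput is split evenly between the 72 GPUs, which the article does not state, and the function names are illustrative only.

```python
# Per-rack figures as quoted in the article for a DGX Vera Rubin NVL72 system.
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
DPUS_PER_RACK = 18
NVLINK_TB_PER_S_PER_RACK = 260.0  # aggregate NVLink throughput per rack


def nvlink_share_per_gpu(rack_tb_per_s: float, gpus: int) -> float:
    """Evenly divide a rack's aggregate NVLink throughput across its GPUs (TB/s).

    The even split is an assumption made for illustration.
    """
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return rack_tb_per_s / gpus


def gpus_per_component(gpus: int, components: int) -> float:
    """Number of GPUs paired with each CPU or DPU in the rack."""
    if components <= 0:
        raise ValueError("components must be positive")
    return gpus / components


if __name__ == "__main__":
    share = nvlink_share_per_gpu(NVLINK_TB_PER_S_PER_RACK, GPUS_PER_RACK)
    print(f"NVLink share per GPU: {share:.2f} TB/s")
    print(f"GPUs per Vera CPU: {gpus_per_component(GPUS_PER_RACK, CPUS_PER_RACK):.0f}")
    print(f"GPUs per DPU: {gpus_per_component(GPUS_PER_RACK, DPUS_PER_RACK):.0f}")
```

Under that assumption, each GPU's share works out to roughly 3.6 TB/s, with two GPUs per Vera CPU and four per DPU.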

The Rubin GPU features a third-generation Transformer Engine and hardware-accelerated compression, enabling inference and training workloads to be processed efficiently at scale.

Connectivity is handled by Spectrum-6 Ethernet switches, Quantum-X800 InfiniBand, and ConnectX-9 SuperNICs, which support high-speed, deterministic AI data transfer.

Nvidia’s SuperPOD design emphasizes end-to-end network performance, ensuring minimal congestion in large AI clusters.

Quantum-X800 InfiniBand offers low latency and high throughput, while Spectrum-X Ethernet handles east-west AI traffic efficiently.

Across its eight NVL72 racks, the SuperPOD incorporates 600TB of fast memory, alongside NVMe storage and integrated AI context memory to support training and inference.

The Rubin platform also integrates advanced software orchestration through Nvidia Mission Control, streamlining cluster operations, automated recovery, and infrastructure management for large AI factories.

A DGX SuperPOD with 576 Rubin GPUs can achieve 28.8 exaflops of FP4 performance, while individual NVL8 systems deliver 5.5 times the FP4 FLOPS of previous Blackwell-based systems.
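The headline numbers can be sanity-checked with simple division. The sketch below uses only figures quoted in the article (576 GPUs, 28.8 exaflops, 72 GPUs per NVL72 system); the function names are illustrative, and it assumes the SuperPOD is built entirely from NVL72 racks.

```python
# Figures quoted in the article.
SUPERPOD_GPUS = 576
SUPERPOD_FP4_EXAFLOPS = 28.8
GPUS_PER_NVL72 = 72

PETAFLOPS_PER_EXAFLOP = 1000


def nvl72_racks_needed(total_gpus: int, gpus_per_rack: int) -> int:
    """Number of full racks needed for total_gpus; rejects partial racks."""
    racks, remainder = divmod(total_gpus, gpus_per_rack)
    if remainder:
        raise ValueError("GPU count is not a whole number of racks")
    return racks


def fp4_petaflops_per_gpu(total_exaflops: float, total_gpus: int) -> float:
    """Average FP4 throughput per GPU, in petaflops."""
    return total_exaflops * PETAFLOPS_PER_EXAFLOP / total_gpus


if __name__ == "__main__":
    racks = nvl72_racks_needed(SUPERPOD_GPUS, GPUS_PER_NVL72)
    per_gpu = fp4_petaflops_per_gpu(SUPERPOD_FP4_EXAFLOPS, SUPERPOD_GPUS)
    per_rack_exaflops = per_gpu * GPUS_PER_NVL72 / PETAFLOPS_PER_EXAFLOP
    print(f"NVL72 racks: {racks}")
    print(f"FP4 per GPU: {per_gpu:.1f} petaflops")
    print(f"FP4 per rack: {per_rack_exaflops:.1f} exaflops")
```

On these inputs, the SuperPOD corresponds to eight NVL72 racks, about 50 petaflops of FP4 per GPU, and about 3.6 exaflops per rack.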

By comparison, Huawei claims 16 exaflops of FP4 performance for its Atlas 950 SuperPod, which suggests Nvidia achieves higher throughput per accelerator and needs fewer units to reach extreme computing levels.

Rubin-based DGX clusters also use fewer nodes and cabinets than Huawei’s SuperCluster, which scales to thousands of NPUs and multiple petabytes of memory.

This performance density allows Nvidia to compete directly with Huawei’s projected computing output while limiting space, power, and interconnect overhead.

The Rubin platform unifies computing, networking, and AI software into a single stack.

Nvidia AI Enterprise software, NIM microservices, and Mission Control orchestration create a cohesive environment for long-context reasoning, agentic AI, and multimodal model deployment.

While Huawei primarily scales through hardware numbers, Nvidia emphasizes rack-level efficiency and tightly integrated software controls, which can reduce operating costs for industrial-scale AI workloads.
