- HBM-on-GPU 3D design promises higher compute density for demanding AI workloads

- Maximum GPU temperatures exceeded 140°C without thermal mitigation strategies

- Halving the GPU clock rate reduced temperatures but slowed down AI training by 28%

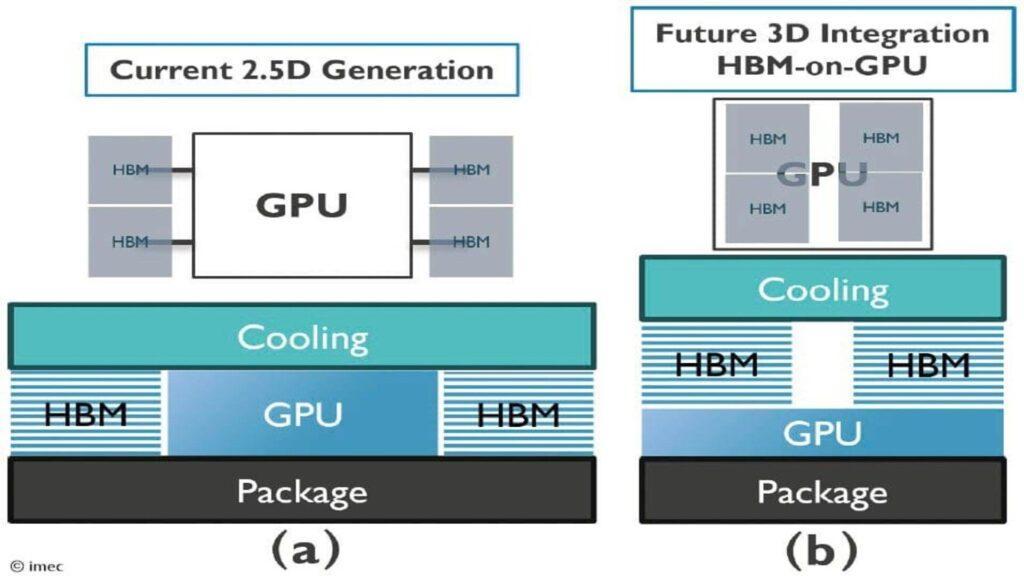

At the 2025 IEEE International Electronic Devices Meeting (IEDM), Imec presented a study of a 3D design that stacks HBM directly on a GPU to increase compute density for demanding AI workloads.

The study follows a thermal system-technology co-optimization approach and places four high-bandwidth memory (HBM) stacks directly on top of a GPU using microbump connections.

Each stack consists of twelve hybrid-bonded DRAM dies, and cooling is applied to the top of the HBM stacks.

Thermal Mitigation Attempts and Performance Tradeoffs

The study uses power maps derived from industry-relevant workloads to test how the setup responds under realistic AI training conditions.

This 3D layout promises a jump in computing density and memory per GPU.

It also offers higher GPU memory bandwidth compared to 2.5D integration, where HBM stacks sit around the GPU on a silicon interposer.

However, thermal simulations reveal serious challenges for HBM 3D design on GPUs.

Without mitigation, the maximum GPU temperature reached 141.7°C, well above operating limits, while the 2.5D baseline peaked at 69.1°C under the same cooling conditions.

Imec explored technology-level strategies, such as HBM stack fusion and thermal silicon optimization.

System-level strategies included dual-sided cooling and GPU frequency scaling.

Cutting the GPU clock frequency by 50% brought maximum temperatures below 100°C, but at the cost of a 28% penalty on AI training workloads.
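Halving the clock did not halve performance. One common way to reason about this, assumed here for illustration and not taken from Imec's study, is an Amdahl-style model: only the clock-bound share of runtime stretches when frequency drops, while memory-bound time stays fixed. The sketch below inverts that toy model to estimate what clock-bound fraction the reported 28% penalty would imply. The function names are hypothetical, and because "28% penalty" could mean either 28% longer runtime or 28% lower throughput, both readings are computed.

```python
def runtime_ratio(clock_bound_fraction: float, clock_scale: float) -> float:
    """Relative runtime after scaling the clock by clock_scale (0.5 = half speed).

    Toy model (an assumption): the clock-bound share of runtime stretches
    by 1 / clock_scale, while the memory-bound share is unaffected.
    """
    return (1.0 - clock_bound_fraction) + clock_bound_fraction / clock_scale


def implied_clock_bound_fraction(ratio: float, clock_scale: float) -> float:
    """Invert runtime_ratio: which clock-bound fraction yields this runtime ratio?"""
    return (ratio - 1.0) / (1.0 / clock_scale - 1.0)


HALF_CLOCK = 0.5
PENALTY = 0.28  # workload penalty reported at half clock

# Reading A: runtime grows by 28%.
frac_a = implied_clock_bound_fraction(1.0 + PENALTY, HALF_CLOCK)
# Reading B: throughput drops by 28%, so runtime grows by 1 / 0.72.
frac_b = implied_clock_bound_fraction(1.0 / (1.0 - PENALTY), HALF_CLOCK)

print(f"runtime +28%:    ~{frac_a:.0%} of runtime clock-bound")
print(f"throughput -28%: ~{frac_b:.0%} of runtime clock-bound")
```

Under either reading, the toy model suggests that well under half of the workload's runtime scales with the GPU clock, which is why a 50% frequency cut need not mean a 50% slowdown. It ignores real effects such as power, voltage and temperature interactions.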

Despite these limitations, Imec maintains that the 3D structure can offer greater compute density and performance than the 2.5D reference design.

“Halving the GPU core frequency brought the maximum temperature from 120°C to below 100°C, achieving a key goal for memory performance. Although this step comes with a 28% workload penalty…” said James Myers, Systems Technology Program Manager at Imec.

“…the overall package outperforms the 2.5D baseline thanks to the higher performance density offered by the 3D configuration. We are currently using this approach to study other GPU and HBM configurations…”
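The trade-off in these comments comes down to simple arithmetic on performance density (throughput per unit of package area). The source gives no footprint or throughput figures, so the sketch below makes two labeled assumptions: the 3D and 2.5D packages deliver the same throughput at full clock, and the 28% penalty means throughput falls to 72% of that. Under those assumptions, the throttled 3D package still matches or beats the 2.5D baseline on density as long as its footprint is below 72% of the 2.5D footprint. The helper names and the example footprint ratio are hypothetical.

```python
def performance_density(relative_throughput: float, relative_area: float) -> float:
    """Throughput per unit package area, both relative to the 2.5D baseline (= 1.0)."""
    return relative_throughput / relative_area


def breakeven_area_ratio(penalty: float) -> float:
    """Largest 3D/2.5D footprint ratio at which the throttled 3D package
    still matches the 2.5D baseline's performance density.

    Assumes equal full-clock throughput and that `penalty` is a throughput loss.
    """
    return 1.0 - penalty


PENALTY = 0.28  # workload penalty reported at half clock
limit = breakeven_area_ratio(PENALTY)
print(f"3D matches 2.5D density at a footprint of {limit:.0%} of the 2.5D footprint")

# Hypothetical footprint ratio, for illustration only (not from the study).
example_area_ratio = 0.5
print(performance_density(1.0 - PENALTY, example_area_ratio))  # ~1.44 vs 2.5D baseline
```

Because stacking HBM on the GPU removes the memory stacks from around the die, the footprint side of the ratio is where the 3D approach gains; any extra throughput from the higher memory bandwidth would relax the break-even point further.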

The organization suggests this approach could support thermally resilient hardware for AI tools in dense data centers.

Imec presents this work as part of a broader effort to link technological decisions with system behavior.

This includes the Cross-Technology Co-Optimization (XTCO) program, launched in 2025, which combines system-technology co-optimization (STCO) and design-technology co-optimization (DTCO) approaches to align technology roadmaps with system scaling challenges.

Imec said XTCO enables collaborative problem solving for critical bottlenecks across the semiconductor ecosystem, including system and fabless companies.

However, such technologies are likely to remain confined to specialized facilities with controlled thermal and power budgets.

Via TechPowerUp
