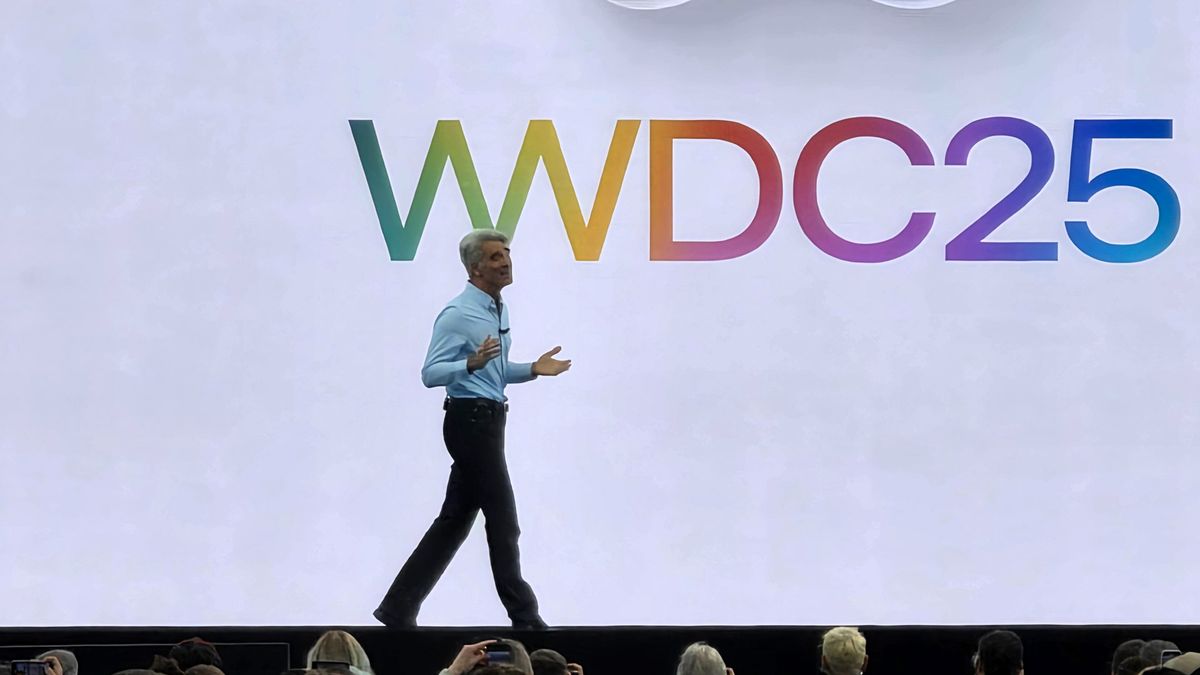

It cannot be denied that Apple’s digital assistant did not exactly occupy a place of honor in the WWDC 2025 keynote this year. Apple mentioned it and reiterated that it was taking longer than it had planned to bring everyone the Siri it promised a year ago, saying that the complete Apple Intelligence integration would arrive “next year.”

Since then, Apple has confirmed that this means 2026. That means we will not see, in 2025, the kind of deep integration that would have let Siri use what it knows about you and your iOS-running iPhone to become a better digital companion. As part of the newly announced iOS 26, it will not use app intents to understand what is happening on the screen and take action on your behalf.

I have my theories about the reason for the delay, most of which revolve around the tension between delivering a rich AI experience and Apple’s core principles regarding privacy. The two often seem to be at cross purposes. These, however, are guesses. Only Apple can tell us exactly what is happening, and now it has.

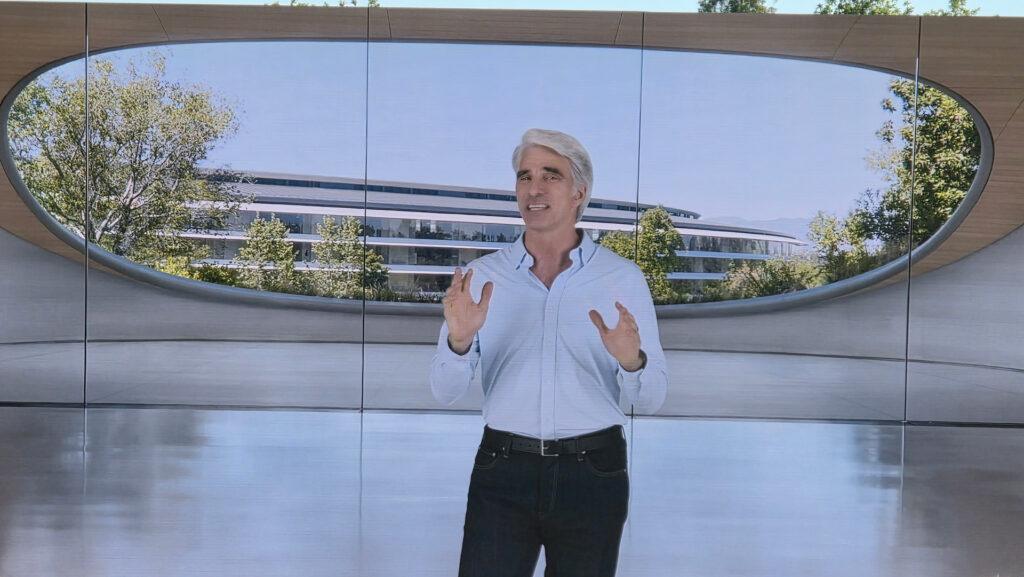

Along with Tom’s Guide Global Editor Mark Spoonauer, I sat down shortly after the keynote with Apple’s Senior Vice President of Software Engineering, Craig Federighi, and Apple’s Vice President of Worldwide Marketing, Greg Joswiak, for a wide-ranging podcast discussion about practically everything Apple announced during its 90-minute keynote.

We started by asking Federighi about what Apple had delivered with Apple Intelligence, as well as the state of Siri and what iPhone users could expect this year or next. Federighi was surprisingly transparent, offering a window into Apple’s strategic thinking when it comes to Apple Intelligence, Siri, and AI.

Far from nothing

Federighi began by walking us through everything Apple has delivered with Apple Intelligence so far, and, to be fair, it is a considerable amount.

“We were very focused on creating a broad platform for really integrated personal experiences in the OS,” Federighi recalled, referring to Apple’s original Apple Intelligence announcement at WWDC 2024.

At that time, Apple demonstrated Writing Tools, summaries, notification summaries, memory movies, semantic search of the Photos library, and Clean Up for photos. It delivered all of those features, but even as Apple was building those tools, Federighi told us, it recognized that “we could, on that foundation of on-device large language models, Private Cloud Compute as a foundation for even more intelligence, [and] semantic indexing on the device to retrieve personal knowledge, build a better Siri.”

Overconfidence?

A year ago, Apple’s confidence in its ability to build such a Siri led it to demonstrate a platform that could handle more conversational context, misspeaking, Type to Siri, and a significantly redesigned UI. Again, these are all things Apple delivered.

“We also talked about […] things like being able to invoke a much broader range of actions across your device through app intents orchestrated by Siri, to let it do more things,” Federighi added. “We also talked about the ability to use personal knowledge from that semantic index, so if you ask for things like, ‘What’s that podcast that Joz sent me?’ we could find it, whether it was in your messages or in your email, and call it up, and then even act on it using those app intents.”

This is the well-known story. Apple overpromised and underdelivered: it failed to ship the Apple Intelligence Siri update by the end of 2024, and by spring 2025 it admitted the update would not be ready any time soon. Why it happened has, until now, been a mystery. Apple is not in the habit of demonstrating technology or products that it does not know for certain it can deliver on time.

Federighi, however, explained in some detail where things went wrong and how Apple is moving forward from here.

“We found when we were developing this feature that we had, really, two phases, two versions of the ultimate architecture that we were going to create,” he explained. “Version one we had working here at the time we were getting close to the conference, and we had, at that time, high confidence that we could deliver it. We thought December, and if not, we figured spring, so we announced it as part of WWDC. Because we knew the world wanted a really complete picture of ‘What is Apple thinking about the implications of Apple Intelligence, and where is it going?’”

A history of two architectures

While Apple was working on a V1 of the Siri architecture, it was also working on what Federighi called V2, “a deeper end-to-end architecture that we knew was ultimately what we wanted to create, to get to the full set of capabilities that we wanted for Siri.”

What everyone saw during WWDC 2024 were videos of that V1 architecture, and it was the foundation for the work that began in earnest after the WWDC 2024 reveal, in preparation for the full launch of the Apple Intelligence Siri.

“We set about for months making it work better and better across more app intents, better and better for doing search,” Federighi added. “But fundamentally, we found that the limitations of the V1 architecture weren’t getting us to the quality level that we knew our customers needed and expected. We realized that with the V1 architecture, you know, we could push and push and push and put in more time, but if we tried to push that out in the state it was going to be in, it would not meet our customers’ expectations or Apple’s standards, and we had to move to the V2 architecture.

“As soon as we realized that, and that was during the spring, we let the world know that we weren’t going to be able to put that out, and that we were going to keep working on the new architecture and release something.”

We realized that […] if we tried to push that out in the state it was going to be in, it would not meet our customers’ expectations or Apple’s standards, and that we had to move to the V2 architecture.

Craig Federighi, Apple

However, that switch, and what Apple learned along the way, meant that Apple would not make the same mistake again by promising a new Siri for a date it could not guarantee to hit. Instead, Apple will not “precommit to a date,” Federighi explained, “until we have the V2 architecture delivering, not just in a form that we can demonstrate for all of you …”

He then joked that, while he “could,” in fact, demo a working V2 model, he was not going to. Then he added, more seriously, “We have, you know, the V2 architecture, of course, working internally, but we’re not yet to the point where it delivers the quality level that I think makes it a great Apple feature, so we’re not announcing a date for when it happens. We’ll announce the date when we’re ready to seed it and you’re all ready to experience it.”

I asked Federighi whether, by the V2 architecture, he meant a total rebuild of Siri, but he disabused me of that notion.

“I should say that the V2 architecture is not, it wasn’t a start-over.”

A different AI strategy

Some might see Apple’s failure to deliver the full Siri on its original schedule as a strategic stumble. But Apple’s approach to AI and product is also completely different from that of OpenAI or Google Gemini. It does not revolve around a single product or a powerful chatbot. Siri is not necessarily the centerpiece we all imagined.

Federighi does not dispute that “AI is this transformational technology […] Everything that is emerging from this architecture will have a decades-long impact across the industry and the economy, much like the internet, much like mobility, and it will touch Apple’s products and touch experiences that are outside Apple products.”

Apple clearly wants to be part of this revolution, but on its own terms and in ways that most benefit its users while, of course, protecting their privacy. Siri, however, was never the endgame, as Federighi explained.

AI is this transformational technology […] and it will touch Apple’s products and touch experiences that are outside Apple products.

Craig Federighi, Apple

“When we started with Apple Intelligence, we were very clear: it wasn’t just about building a chatbot. So, seemingly, when some of these Siri capabilities I mentioned didn’t show up, people said, ‘What happened, Apple? I thought you were going to give us your chatbot.’ That was never the goal, and it remains not our primary goal.”

So what is the goal? I think it may be fairly obvious from the WWDC 2025 keynote. Apple intends to integrate Apple Intelligence across all its platforms. Instead of sending you to a single app like ChatGPT for your AI needs, Apple is putting it, in a way, everywhere. It is done, Federighi explains, “in a way that meets you where you are, not that you’re going off to a chat experience to get things done.”

Apple understands the appeal of conversational bots. “I know a lot of people find it a really powerful way to gather their thoughts, brainstorm […] So, sure, these are great things,” Federighi says. “Are they the most important thing for Apple to develop? Well, time will tell where we go there, but that’s not the main thing we set out to do at this time.”

Check back soon for a link to the TechRadar and Tom’s Guide podcast featuring the full interview with Federighi and Joswiak.