- Tiiny AI Pocket Lab runs large models locally, avoiding cloud dependency

- Mini PC runs advanced inference tasks without discrete GPU support

- Models from 10B to 120B parameters run offline within a 65W power envelope

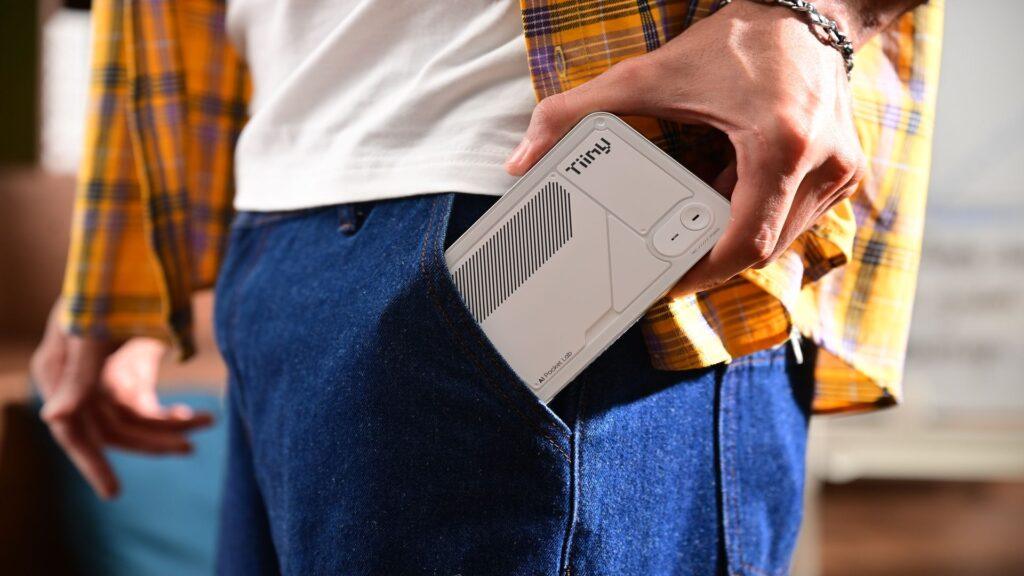

Tiiny, an American startup, has launched AI Pocket Lab, a pocket-sized AI supercomputer capable of running large language models locally.

The device is a mini PC designed to run advanced inference workloads without relying on the cloud, external servers, or discrete accelerators.

The company claims that all processing remains offline, eliminating network latency and limiting external data exposure.

Designed to run large models without the cloud

“Cloud AI has brought remarkable progress, but it has also created dependency, vulnerability and sustainability challenges,” said Samar Bhoj, GTM Director at Tiiny AI.

“With Tiiny AI Pocket Lab, we believe that intelligence should not belong in data centers, but in people. This is the first step in making advanced AI truly accessible, private and personal, by bringing the power of big cloud models to every individual device.”

The Pocket Lab targets large personal models for complex reasoning and long-context tasks while operating within a 65W power budget.

Tiiny claims consistent performance for models in the 10B to 100B parameter range, with support extending up to 120B.

This upper limit approaches the scale of models served by leading cloud systems, which would allow advanced reasoning and extended-context tasks to run locally.

Guinness World Records has reportedly certified the hardware for running a 100B-class model locally.

The system uses a 12-core ARMv9.2 CPU combined with a custom heterogeneous AI module delivering approximately 190 TOPS of compute.

The system pairs 80GB of LPDDR5X memory with a 1TB SSD, and total power consumption stays within the 65W envelope.
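For a rough sense of what fits in 80GB, the sketch below estimates the memory taken by model weights alone at a few common precisions. It assumes decimal gigabytes and ignores the KV cache, activations and the operating system; Tiiny has not said which precision it uses, so the bit widths are illustrative and `weight_footprint_gb` is a hypothetical helper.

```python
GB = 10**9  # decimal gigabytes, as commonly used in hardware specs
MEMORY_GB = 80  # Pocket Lab's stated LPDDR5X capacity


def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in decimal GB.
    Assumption: ignores KV cache, activations, runtime and OS overhead."""
    return params * bits_per_weight / 8 / GB


for params in (10e9, 100e9, 120e9):
    for bits in (16, 8, 4):
        size = weight_footprint_gb(params, bits)
        verdict = "fits" if size <= MEMORY_GB else "does not fit"
        print(f"{params / 1e9:.0f}B @ {bits}-bit: {size:.0f} GB ({verdict} in {MEMORY_GB} GB)")
```

Under these assumptions, a 120B model fits in 80GB only at roughly 4-bit precision (about 60GB of weights), which points to aggressive quantization; that is an inference from the arithmetic, not a published specification.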

In size, it resembles a large external drive more than a workstation, in keeping with its pocket-oriented branding.

While the specifications resemble those of a Houmo Manjie M50-style chip, no independent real-world performance data is available yet.

Tiiny also emphasizes an open-source ecosystem that supports one-click installation of major agent models and frameworks.

The company says it will provide ongoing updates, including what it describes as OTA hardware updates.

This wording is problematic, since over-the-air mechanisms typically deliver software and firmware, not hardware.

The phrase more likely reflects imprecise wording or a marketing error than a literal hardware modification.

The technical approach relies on two software-driven optimizations rather than scaling raw silicon performance.

TurboSparse focuses on selectively activating neurons to reduce the cost of inference without altering the model structure.
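The idea behind activation sparsity can be sketched with a toy feed-forward layer: when a ReLU-style activation outputs zero for a neuron, that neuron contributes nothing to the next projection and can be skipped without changing the result. This is a generic pure-Python illustration under that assumption, not TurboSparse's actual code; the function names and layer sizes are hypothetical.

```python
import random


def relu(x: float) -> float:
    """ReLU: negative pre-activations become exactly zero."""
    return x if x > 0.0 else 0.0


def ffn_dense(x, w_up, w_down):
    """Dense feed-forward layer: every hidden neuron is computed and used."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_up]
    return [sum(row[i] * hidden[i] for i in range(len(hidden))) for row in w_down]


def ffn_sparse(x, w_up, w_down):
    """Same layer, but only neurons with a non-zero activation feed the
    down projection. Returns the output and the number of active neurons."""
    active = {}
    for i, row in enumerate(w_up):
        h = relu(sum(w * xi for w, xi in zip(row, x)))
        if h != 0.0:
            active[i] = h  # zero-valued neurons are skipped below
    out = [sum(row[i] * h for i, h in active.items()) for row in w_down]
    return out, len(active)


random.seed(0)
d_model, d_ff = 8, 32  # hypothetical toy sizes
x = [random.gauss(0.0, 1.0) for _ in range(d_model)]
w_up = [[random.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(d_ff)]
w_down = [[random.gauss(0.0, 1.0) for _ in range(d_ff)] for _ in range(d_model)]

dense = ffn_dense(x, w_up, w_down)
sparse, n_active = ffn_sparse(x, w_up, w_down)
print(f"{n_active}/{d_ff} neurons active")
print("max difference:", max(abs(a - b) for a, b in zip(dense, sparse)))
```

For clarity, this sketch still evaluates every up-projection to discover which neurons are active, so it saves only the down-projection work. Practical sparse-inference systems try to predict the active neurons in advance so that the savings cover both projections.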

PowerInfer distributes workloads across heterogeneous components, coordinating the CPU with a dedicated NPU to approach server-grade performance with lower power consumption.
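PowerInfer's published approach builds on the observation that a small set of "hot" neurons fire for most inputs while the rest are "cold" and input-dependent. A minimal sketch of that split, assuming activation traces are available from profiling, ranks neurons by how often they fired, places the hottest ones on the NPU up to a capacity limit, and leaves the rest to the CPU. The greedy ranking is a simplification for illustration, not PowerInfer's or Tiiny's actual placement policy, and all names here are hypothetical.

```python
from collections import Counter


def profile_activations(traces):
    """Count how often each neuron fired across sample inputs.
    Each trace is the set of neuron indices active for one input."""
    counts = Counter()
    for active in traces:
        counts.update(active)
    return counts


def place_neurons(counts, num_neurons, npu_capacity):
    """Greedy placement: the most frequently activated ("hot") neurons go to
    the NPU until its capacity is used; the rest ("cold") stay on the CPU.
    Ties are broken by neuron index so the result is deterministic."""
    ranked = sorted(range(num_neurons), key=lambda i: (-counts[i], i))
    return set(ranked[:npu_capacity]), set(ranked[npu_capacity:])


def split_work(active, hot):
    """For one input, route each active neuron to the device that holds it."""
    npu = sorted(i for i in active if i in hot)
    cpu = sorted(i for i in active if i not in hot)
    return npu, cpu


traces = [{0, 1, 2}, {0, 1, 5}, {0, 2, 7}, {0, 1, 3}]  # toy profiling data
counts = profile_activations(traces)
hot, cold = place_neurons(counts, num_neurons=8, npu_capacity=3)
npu_work, cpu_work = split_work({0, 1, 5}, hot)
print("NPU:", npu_work, "CPU:", cpu_work)  # NPU: [0, 1] CPU: [5]
```

Because hot neurons handle most of the work for typical inputs, the faster accelerator does the bulk of the computation while the CPU only picks up the occasional cold neuron.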

The system has no discrete GPU, and the company argues that careful software optimization removes the need for expensive accelerators.

These claims indicate that efficiency gains, rather than brute-force hardware, are the primary differentiator.

Tiiny AI positions Pocket Lab as a response to the sustainability, privacy, and cost pressures affecting centralized AI services.

Running large language models locally could reduce recurring cloud expenses and limit the exposure of sensitive data.

However, claims about capacity, server-grade performance, and smooth scaling on such limited hardware remain difficult to independently verify.

Via TechPowerUp
